ISSUE 19.06 • 2022-02-07 HARDWARE By Ben Myers With solid-state drives (SSDs), the SMART ante is raised because an SSD can fail catastrophically — CLU

[See the full post at: Our world is not very S.M.A.R.T. about SSDs]


Our world is not very S.M.A.R.T. about SSDs


- This topic has 204 replies, 37 voices, and was last updated 2 years, 11 months ago.


Alex5723

AskWoody Plus -

Paul T

AskWoody MVP

February 7, 2022 at 3:31 am #2423656

“an SSD can fail catastrophically”

So can an HDD, although they tend to give early warning as the mechanical bits wear. The only resolution is a regular backup and a new disk.

cheers, Paul

1 user thanked author for this post.

DaveBoston

AskWoody Plus

February 7, 2022 at 4:35 am #2423669

Hi Ben,

Thanks for the good article on SSDs. I have a related problem with my MacBook Pro. I have the smallest SSD (128 GB) and now discover it’s too small to take the next macOS update. How do I determine whether the SSD can be upgraded to 256/512 GB or whether it’s soldered in? I found these specs for an upgrade kit but don’t see how to determine if my laptop is compatible using the printed part number. Where can I find that? MacBook Pro 2017 running macOS 10.14.6.

Compatibility:

MacBook Pro 13″ A1708

– MacBookPro13,1 Late 2016: MLL42LL/A (2.0 GHz Core i5)

– MacBookPro13,1 Late 2016: MLL42LL/A (2.4 GHz Core i7)

– MacBookPro14,1 Mid 2017: MPXQ2LL/A (2.3 GHz Core i5)

– MacBookPro14,1 Mid 2017: MPXQ2LL/A (2.5 GHz Core i7)

Identifying Numbers:

– APN: 661-05112, 661-07586

– Printed Part #: 656-0042, 656-0045, 656-0045A

1 user thanked author for this post.

-

Graham

AskWoody PlusFebruary 7, 2022 at 5:01 am #2423670 -

OscarCP

Member

February 7, 2022 at 12:01 pm #2423783

Graham: ” … is to open up the MacBook and take a look. You’ve got nothing to lose since you’ll be going in there anyway.”

Unfortunately, at least in the case of a laptop, one needs a special screwdriver to remove a whole bunch of tiny, tiny, tiny pentalobe screws, as well as correspondingly steady hands and good eyesight. “Open the Mac and have a look” is not good practical advice for Mac users who are not so neurologically and/or sensorily gifted, or who have no hands-on experience doing things that amount to performing successful brain surgery in the dark.

I agree with Alex that “it can be replaced by a skilled user“, “skilled” being the operative word here.

And Mac laptops of post 2014 vintage have the SSD glued, I believe.

Ex-Windows user (Win. 98, XP, 7); since mid-2017 using also macOS. Presently on Monterey 12.15 & sometimes running also Linux (Mint).

MacBook Pro circa mid-2015, 15" display, with 16GB 1600 MHz DDR3 RAM, 1 TB SSD, a Haswell architecture Intel CPU with 4 Cores and 8 Threads model i7-4870HQ @ 2.50GHz.

Intel Iris Pro GPU with Built-in Bus, VRAM 1.5 GB, Display 2880 x 1800 Retina, 24-Bit color.

macOS Monterey; browsers: Waterfox "Current", Vivaldi and (now and then) Chrome; security apps. Intego AV -

Ben Myers

AskWoody PlusFebruary 7, 2022 at 6:32 pm #2423868 -

anonymous

Guest

February 14, 2022 at 9:07 am #2425169

Another reason not to own Apple products.

1 user thanked author for this post.

-

anonymous

Guest

February 15, 2022 at 7:46 am #2425454

Just another reason I have never owned, and never will own, anything Apple. I am old, and started with personal computers in the very beginning, so I very well remember the introduction of the IBM PC, which started the world of building your own computer with parts from many different sources. Apple was a highly proprietary system, with parts available ONLY from Apple, and it rapidly became a distant second system. Years of building my own systems and working on many others led me down the anti-Apple path.

I know Apple now has some good products for some people, but to me it would be almost impossible for Apple to make a second first impression.

1 user thanked author for this post.

-

-

-

Alex5723

AskWoody Plus

February 7, 2022 at 5:15 am #2423675

The SSD on a non-Touch Bar MacBook Pro 2017 can be upgraded by Apple (proprietary SSD), but can be replaced by a skilled user.

OscarCP

Member

February 7, 2022 at 4:26 pm #2423848

The model number, if that is what you mean by “part number”, is engraved on a plate stuck to the bottom of the laptop.

Ben Myers

AskWoody Plus

February 7, 2022 at 6:28 pm #2423866

Dave, been there with a few MacBooks, Pro and Air. Your best bet is to go to everymac.com: https://everymac.com/ultimate-mac-lookup/ Enter the A-number and the EMC number, and the website will tell you what’s inside your MacBook. A MacBook Pro from 2017 is old enough that its SSD is not soldered, but the interface pinout is proprietary, so you need a proprietary Mac-compatible SSD, 256GB, 512GB or larger.

OWC sells a lot of kits. iFixit has superb illustrated how-tos for Mac repair or replacement of parts, and I think that the company also sells Mac-compatible SSD kits. Some of these kits include the screwdrivers to remove the ever-so-special pentalobe screws. You can also get a cheap but serviceable kit with many screwdriver heads at Walmart, though there are kits that are easier to use.

I’ve done a few of these MacBook repairs, and they are not too difficult if you have the right tools and parts.

-

OscarCP

Member

February 7, 2022 at 7:39 pm #2423887

Ben Myers: True enough, one can get one of those kits. What one cannot get, if one does not have it already, is the skill to use it in the right way to operate on an expensive and possibly irreplaceable piece of hardware.

This is what I would do: Just find a decent shop and take it there. A decent shop may not be easy to find, but finding one is definitely easier than trying to use a kit one does not know what to do with, or lacks the necessary mental and physical skills to use.


BobbyB

AskWoody Lounger

February 7, 2022 at 11:59 pm #2423915

Just “popped the top” on a 2014 MacBook Pro to change the battery (it came with tools, perfect!) and upgraded the drive to 1 TB while I was in there. Thereby hangs a problem: I now have a 256 GB drive hanging around that is perfectly fine in all respects. You would think you could stick it in an enclosure and job done. Well, not exactly. OWC does kits that are the price of a “2nd mortgage” but are good, including tools etc., and you get to use the old SSD/flash drive in an enclosure. If you have a look around, you’ll find that there are no compatible enclosures for your old drive for anything less than OWC’s prices (not cheap).

Even a 128 GB drive such as yours is a pretty useful SSD and still has years of use left in it. Even if you only use it as an ad hoc “Time Machine” backup drive, it’s handy to have a bit of extra storage. Just a consideration. Definitely do your research before “popping the hood”, even if it means wading through tons of YouTube videos. @OscarCP’s links are extremely good sources.

Hope this helps a little

“pssst know anyone who wants to buy a slightly used 256gb Apple drive” 😉

MartyHs

AskWoody PlusFebruary 7, 2022 at 5:58 am #2423683Ben,

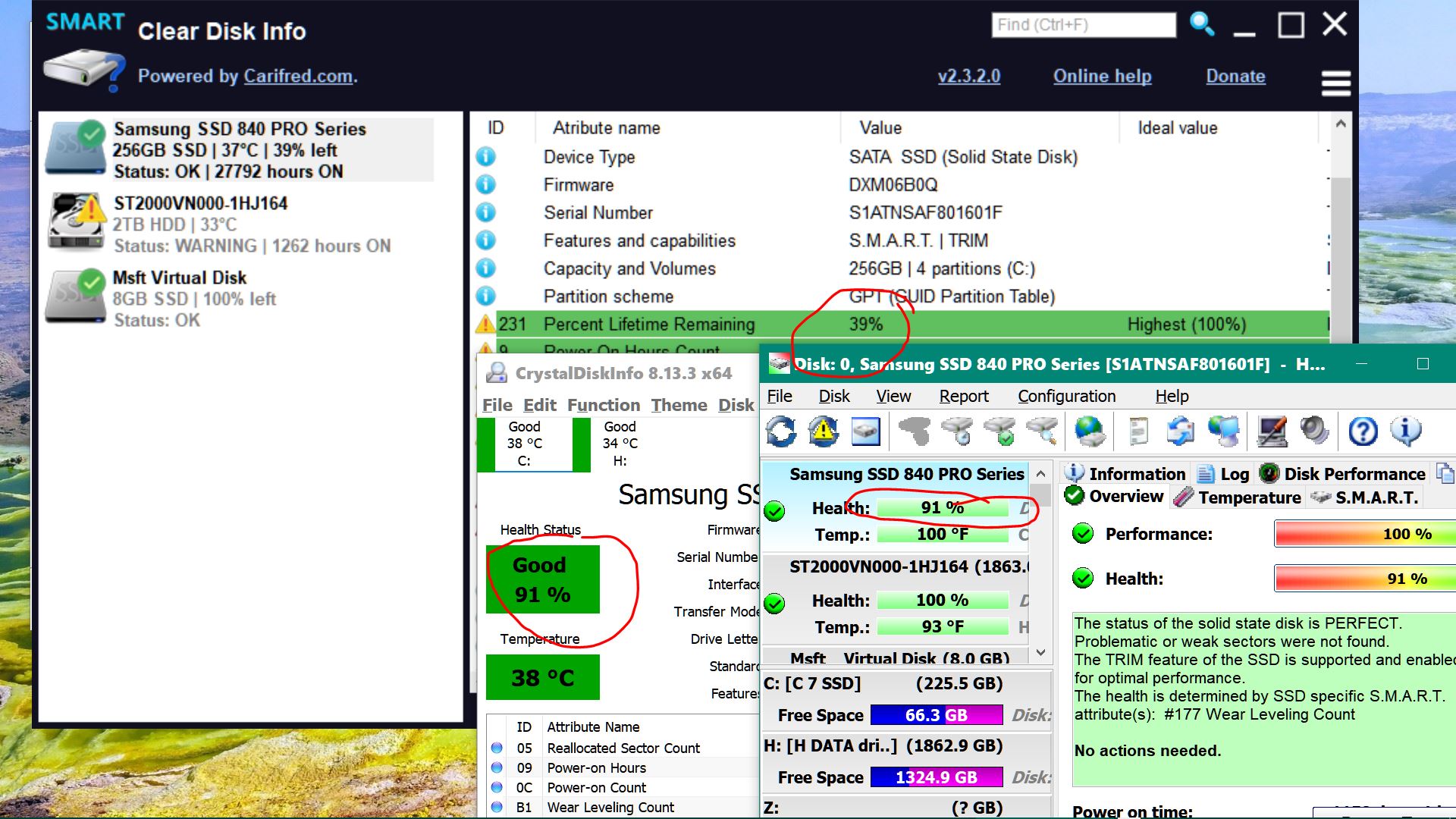

Great article ! … but I was a bit surprised you made no mention of over-provisioning and how it’s ‘supposed’ to affect the SSD life span. Attached are screenshots of my Samsung 840PRO, it’s SMART state and 8% OP. Any comments on how OP affects this conversation would be welcome … Thanks!

Marty

1 user thanked author for this post.

-

Ben Myers

AskWoody Plus

February 7, 2022 at 7:02 pm #2423869

Intentionally, I tried to keep a laser focus on SMART, both to stay on track and also not to write too many words. Maybe you’re suggesting a follow-on article all about SSDs, explaining TRIM, overprovisioning and other SSD features?

1 user thanked author for this post.

-

MartyHs

AskWoody Plus -

Paul T

AskWoody MVP -

MartyHs

AskWoody Plus

February 8, 2022 at 8:30 am #2423960

Hmmm … I get a very different conclusion from the article you referenced! It states:

“When cells become more trouble than they’re worth, fresh blood is called up from the SSD’s overprovisioned “spare” area. These replacement cells ensure the drive maintains the same user-accessible capacity regardless of any underlying flash failures.”

This is exactly what they show the Samsung 840 PRO doing (which happens to be the SSD in my Dell desktop!):

The article concludes for the 840 PRO:

“That reserve counter seems to be the best gauge for the 840 Pro’s remaining life. The wear-leveling count is supposed to be related to drive health, but it expired 1.5PB ago.

Given what’s supposedly still in the tank, 3PB doesn’t seem impossible.”

-

NetDef

AskWoody_MVP

February 8, 2022 at 11:40 am #2424001

May have to agree to disagree. I’ve been mildly overprovisioning all SSDs installed in my custom builds since 2014. These workstations are under constant heavy use as CAD/graphics/rendering tools. Their drives, overprovisioned at 15% of available space, consistently perform better over time than factory-installed drives, and last much longer before replacement. We mainly use Samsung or Crucial SSDs.

Additionally, I started doing the same for RAID arrays, and likewise drive replacements and dropouts on those arrays are far less frequent than on non-overprovisioned sets.

But for a low level load for many home users, the benefit is not likely noticeable.

Also see https://www.anandtech.com/show/8216/samsung-ssd-850-pro-128gb-256gb-1tb-review-enter-the-3d-era/7

~ Group "Weekend" ~
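For readers wondering what figures such as “8% OP” or “15% of available space” amount to in gigabytes, here is a minimal Python sketch. It assumes user-set overprovisioning is done by leaving a fraction of the drive unpartitioned, and it reports the ratio of reserved to usable space; the function names and the 512 GB example drive are hypothetical and do not come from any vendor tool.

```python
def overprovision(capacity_gb: float, reserve_fraction: float) -> tuple[float, float]:
    """Return (usable_gb, reserved_gb) when a fraction of the drive is left unpartitioned."""
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    reserved = capacity_gb * reserve_fraction
    return capacity_gb - reserved, reserved


def op_ratio(usable_gb: float, reserved_gb: float) -> float:
    """Overprovisioning ratio in percent: reserved space relative to usable space."""
    return 100.0 * reserved_gb / usable_gb


# Hypothetical 512 GB drive with 15% of its capacity left unpartitioned.
usable, reserved = overprovision(512, 0.15)
print(f"usable {usable:.1f} GB, reserved {reserved:.1f} GB, "
      f"OP ratio {op_ratio(usable, reserved):.1f}%")
```

Note that the same reservation reads as 15% of total capacity but roughly 17.6% of usable space, so it is worth checking which convention a given tool reports.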

-

-

-

-

SupremeLaW

AskWoody Plus

February 16, 2022 at 11:36 am #2425740

I much appreciate your attention to S.M.A.R.T.

Our RAID controllers come with a GUI that logs anomalies with much better and much more useful error messages; and, yes those controllers also report S.M.A.R.T. data, but the latter is entirely useless: it never changes!

Given the state of affairs among the manufacturers you so well described, from practical experience I honestly believe that there will be more to gain from directing their attention to the poorly understood problem of cable defects and failures.

I mention this here because I have recently had two (2) entirely different cables fail completely: one was in an internal SFF-8087 fan-out SATA data cable, and the other was in a SFF-8088-to-SFF-8470 multi-lane external cable.

Let’s itemize the sheer number of mechanical connections in the latter:

(1) the edge connector on the add-in RAID controller card

(2) the multi-lane connection on the SFF-8088 that plugs into the controller

(3) the multi-lane connection on the SFF-8470 that plugs into an adapter in the external enclosure

(4) the SATA cables that connect to the internal side of the latter adapter

(5) the same SATA cables that connect to the SSDs and/or HDDs in that external enclosure.

When one of 2 x SFF-8088-to-SFF-8470 failed, I immediately suspected that a reliable HDD had failed. It took me a while to realize my error, because I should have suspected the cabling FIRST, and that would have saved me a lot of trouble-shooting time.

The test that worked was to switch those 2 cables, and the “failing” HDD started working again. So, I ordered 2 new cables, and now we’re back to normal.

More of this Story: I’m aware of some aging motherboard manuals that mentioned a technology for testing network cables. I may be out in left field, but I do believe the entire industry could benefit a LOT from the refinement of similar technologies that are dedicated to isolating failing and faulty cables, particularly data transmission cables.

p.s. Apologies if this “rant” is off-topic. I should have saved any relevant S.M.A.R.T. data that was recorded when our cables were failing; in the future, thanks to this excellent article, I intend to do so.

DaveBoston

AskWoody Plus

February 7, 2022 at 6:06 am #2423686

I looked at a number of YouTube videos. Some recommended removing one narrow cable and a screwed-down clip before replacing the SSD; others said DON’T remove that cable but remove a larger one. Anyone know about this? I think the point was to disconnect the battery, but I’m not sure why this would be necessary, and it’s liable to cause cable damage.

bbearren

AskWoody MVP

February 7, 2022 at 8:26 am #2423701

“With solid-state drives (SSDs), the SMART ante is raised because an SSD can fail catastrophically”

I don’t pay any attention to SMART data. I don’t use Google, but Google uses a lot of drives. In a study of consumer-grade disk drives (pdf) published in 2007, Google found S.M.A.R.T data not so reliable a predictor of drive failure.

“Out of all failed drives, over 56% of them have no count in any of the four strong SMART signals, namely scan errors, reallocation count, offline reallocation, and probational count. In other words, models based only on those signals can never predict more than half of the failed drives.”

In 2007, at least, SMART data was equivalent to a coin flip for predicting failure.
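The arithmetic behind the quoted passage can be made explicit. This is only a sketch of the bound the study describes, using the 56% figure quoted above; the function name is hypothetical, and the calculation says nothing about false alarms on healthy drives.

```python
def max_detectable_fraction(silent_failure_fraction: float) -> float:
    """Upper bound on the share of failed drives that a model relying only on
    these SMART signals could flag, given the share that showed no signal."""
    if not 0.0 <= silent_failure_fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return 1.0 - silent_failure_fraction


# Figure quoted from the 2007 study: over 56% of failed drives showed
# none of the four strong signals before failing.
bound = max_detectable_fraction(0.56)
print(f"At most {bound:.0%} of failed drives could have been predicted")
```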

On the other hand, I personally have had a number of drives fail, at least three catastrophically (one apparently had a short in the PCB and wouldn’t let the PC even POST). In none of those instances did I lose anything, because I had recent drive images of everything on the drive.

If one wishes to have real insurance against data loss, establish a regimen of regular drive imaging, and stick to it religiously, whether with HDD’s or SSD’s. Nothing else will save your bacon as effectively.

Always create a fresh drive image before making system changes/Windows updates; you may need to start over!

We all have our own reasons for doing the things that we do with our systems; we don't need anyone's approval, and we don't all have to do the same things.

We were all once "Average Users".

AWRon

AskWoody Plus

February 7, 2022 at 8:54 am #2423712

Thanks for a great article!

If I switch a Windows 7 computer with 16 GB of RAM to an SSD, can I help extend the life of the SSD by turning off the paging file?

For a “typical” office machine (email, MS Office, web browsing, moderate YouTube videos, etc), will that result in a performance hit? And if so, how much more RAM might be needed to compensate?

And does RAM wear out the same way an SSD does? 🙂

— AWRon

-

AlexEiffel

AskWoody_MVP

February 7, 2022 at 4:08 pm #2423836

You should keep a minimal pagefile to avoid issues. I had this discussion a while ago with ch100 here. I think 800MB was the value, and that is what I would suggest as a minimum, although I thought I used a lower value. Adding more RAM won’t change that. A fixed pagefile is best if it is large enough to cover the need when RAM is not enough. If you do run out of RAM, the system will start swapping, and you will notice a huge slowdown on a mechanical hard disk, although I have never seen how it impacts performance on an SSD, especially the fast NVMe kind. If you do need more RAM and the pagefile is fixed and too small, you can run into issues and have the system break down on you.

The key here is not needing to use your full amount of RAM. I don’t think the pagefile gets used any more just because you make it bigger, if it is not needed, on Windows. Unix is different and I am not sure about Linux today. If you use applications that need a lot of RAM and leave a ton of browser windows open for days, with memory leaks, you can end up using a lot of RAM. But 16GB seems plenty for normal usage: browsing the web, no gaming and no heavy-duty apps like video editing.

I monitor my RAM usage and have never run into issues. I restart my browser when it starts to eat up gigs after days of being open on many tabs. But if you want peace of mind, you can let Windows manage your swap file. It will grow if needed. The fact that you have a lot of RAM for your needs is probably the most important factor in all this. I’m not sure the rest makes much difference in your real-life experience.

I’ve never heard of RAM wearing out due to use. It needs power to keep information, so I guess it always has power regardless of whether you write to it or not while using your PC. I wouldn’t worry about this.

Just having 16GB and an SSD does the most for your performance.

-

Ben Myers

AskWoody Plus

February 7, 2022 at 7:06 pm #2423870

Better than the paging file, turn off indexing! The paging file is there for the times when your computer runs out of memory, and it has to swap out data and program segments to make room for the program you just clicked on.

1 user thanked author for this post.

-

Paul T

AskWoody MVP

February 8, 2022 at 2:01 am #2423928

“can I help extend the life of the SSD by turning off the paging file?”

There is no point trying to extend the life of an SSD.

They are very long lived and in normal use (anything not crypto generation) will give many years of service – sudden failure notwithstanding.

See this 7 year old article for more info.

https://techreport.com/review/27436/the-ssd-endurance-experiment-two-freaking-petabytes/

cheers, Paul

-

SupremeLaW

AskWoody Plus

February 14, 2022 at 9:48 pm #2425368

In our LAN hosting a dozen workstations, we’ve lately had nothing but cable failures and absolutely zero SSD failures, after we began to migrate from HDDs to SSDs several years ago.

Failing cables have included vanilla SATA data cables, and SFF-8088-to-SFF-8470 multi-lane cables to external storage enclosures.

S.M.A.R.T data was totally useless for troubleshooting our cable problems.

For that matter, Windows Event Viewer was reporting a “paging device error” but the faulty cable was connected to a brand new HDD that was not hosting pagefile.sys .

It was a long weekend recently, especially when that workstation would hang during POST with no apparent error messages.

I have to kick myself every time a drive has started acting up, only to confirm after trials-and-errors that the cable was the problem all along. LOL!!

-

SupremeLaW

AskWoody Plus

February 14, 2022 at 9:30 pm #2425361

Optimizing pagefile.sys was a hot topic a few years before SSDs became cost-effective.

Here’s what we did on workstations that hosted multiple HDDs:

Starting with a second brand new HDD, we formatted a small NTFS partition in the primary position, that was dedicated to pagefile.sys .

Then, we created a contiguous pagefile.sys in that dedicated partition, using the excellent CONTIG freeware. Of course, we then needed to move the paging file from C: to our newly created contiguous pagefile.sys on that second physical HDD, primary partition.

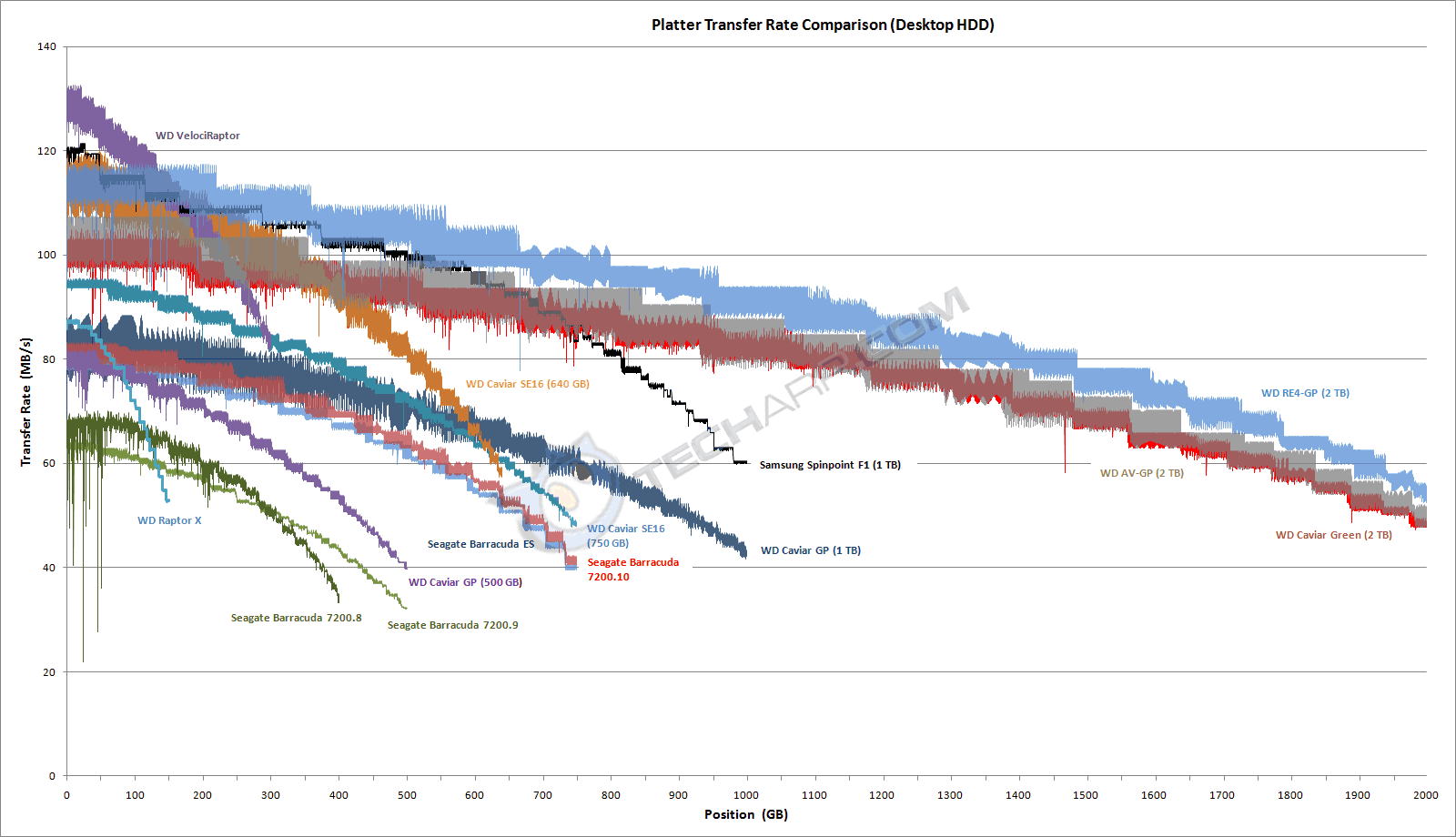

This design exploits the fact that the outermost HDD tracks are the fastest, mainly because HDD linear recording densities are almost constant, regardless of track diameter. Thus, the amount of raw data on any given track is directly proportional to track diameter.

CONTIG creates a pagefile.sys with perfectly contiguous sectors. In this fashion, paging I/O maps memory sectors to pagefile.sys sectors in perfect order, minimizing track-to-track armature movements aka “head thrashing”.
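To put rough numbers on the outer-track argument: if linear recording density is constant and the platter spins at a fixed speed, the data on one track scales with its circumference, so sequential throughput scales with radius. The Python sketch below assumes exactly that simplified model; the radii, spindle speed and density are hypothetical illustration values, not measurements from any particular drive.

```python
import math


def track_throughput_mb_s(radius_mm: float, rpm: float, density_kb_per_mm: float) -> float:
    """Sequential throughput of one track, assuming constant linear density
    and constant rotational speed (a simplified model)."""
    track_kb = 2 * math.pi * radius_mm * density_kb_per_mm  # data held by one track
    revs_per_second = rpm / 60
    return track_kb * revs_per_second / 1000  # kB/s -> MB/s


# Hypothetical 3.5" platter: outermost track near 46 mm, innermost near 20 mm.
outer = track_throughput_mb_s(radius_mm=46, rpm=7200, density_kb_per_mm=5.0)
inner = track_throughput_mb_s(radius_mm=20, rpm=7200, density_kb_per_mm=5.0)
print(f"outer {outer:.0f} MB/s, inner {inner:.0f} MB/s, ratio {outer / inner:.2f}")
```

Under this model the speed ratio equals the radius ratio, which is why a partition placed first on the disk (on the outer tracks) was the favored spot for pagefile.sys.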

I haven’t seen any controlled scientific experiments with different pagefile.sys configurations on SSDs, and would love the opportunity to study any articles already published.

One theory that should be tested empirically is the benefit that obtains when a workstation can perform multiple I/Os in parallel across different drives. This setup should benefit from the practical reality of idle CPU cores, and threads, in a multi-core system.

Such a capability was generalized many years ago by enabling DMA (direct memory access) in peripheral controllers.

If you suspect your system is paging a lot, it’s worth a test to see if performance improves by moving pagefile.sys to a SSD where no other I/O is being performed concurrently with paging.

Along those same lines, a RAID-0 array of multiple NVMe SSDs should perform paging noticeably faster than a JBOD SSD: 2 array members should be almost twice as fast, and 4 array members should be almost 4 times as fast as a single JBOD drive.

We upgraded a refurbished HP workstation with a 4×4 add-in card using 4 PCIe 3.0 Samsung NVMe drives in RAID-0, and it performs READs in excess of 11,000 MB/second.
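As a sanity check on those figures, the sketch below divides the reported array throughput by the number of members and compares the result with the theoretical bandwidth of a PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding). It assumes each of the four drives has its own x4 link, which is how I read “4×4 add-in card”; the function names are hypothetical.

```python
PCIE3_GT_PER_S = 8.0           # PCIe 3.0 raw rate per lane, in GT/s
PCIE3_ENCODING = 128 / 130     # 128b/130b line coding overhead


def pcie3_link_mb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in MB/s."""
    per_lane = PCIE3_GT_PER_S * 1e9 * PCIE3_ENCODING / 8 / 1e6
    return per_lane * lanes


def per_member_mb_s(array_mb_s: float, members: int) -> float:
    """Average throughput each RAID-0 member contributes."""
    return array_mb_s / members


per_drive = per_member_mb_s(11_000, 4)   # figure reported in the post
link = pcie3_link_mb_s(4)                # assumed x4 link per drive
print(f"{per_drive:.0f} MB/s per drive, {per_drive / link:.0%} of a x4 link")
```

On these assumptions each drive delivers about 2,750 MB/s, well within what a single x4 link can carry, so near-linear scaling across four members is plausible.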

On that system, we let Windows 10 manage paging automatically. That workstation is wicked fast for our routine database tasks, so fast in fact that we decided we really didn’t need to host our database in a ramdisk any longer.

Hope this helps.

RVAUser

AskWoody Plus

February 7, 2022 at 9:33 am #2423721

Trying to “spread the word” about this newsletter, I posted the link to this article on FB. So I was surprised to see that, while it displayed the title of the article, it also displayed a Twitter logo and advertised 1Pass.

If a friend of mine had posted this, my first thought would have been that this is a phishing scam. Can y’all do anything about this?

-

Susan Bradley

Manager

February 7, 2022 at 11:26 am #2423772

Not sure where the Twitter icon is coming from, but the 1Password ad is the ad in the free edition.

https://www.askwoody.com/newsletter/free-edition-our-world-is-not-very-s-m-a-r-t-about-ssds/

I’ll test and see what’s going on.

Susan Bradley Patch Lady/Prudent patcher

Intrepid

AskWoody Plus

February 7, 2022 at 10:32 am #2423746

I highly recommend Argus Monitor because: 1. It works with SSDs; 2. It continually checks S.M.A.R.T. data in the background; and 3. It notifies the user if there is a problem, with no false alarms. Excellent product; I’ve been using it for over 10 years.

https://www.argusmonitor.com/overview_fan_control.php

Note: Scroll down to the bottom of this page for the SMART info. The publisher recently decided to emphasize the fan control capabilities of the product for marketing purposes, as most people don’t even use SMART. But the product started as a SMART monitoring app when it was released in 2009 and now works with almost all available HDDs / SSDs. The author also keeps it updated as new motherboard HD/SSD controllers are released.

Ben Myers

AskWoody Plus

February 7, 2022 at 7:12 pm #2423873

Argus Monitor has flown under my radar because it is portrayed as temperature monitor-and-control software, with SMART almost as a throw-in. I just installed it and it displays SMART data for an NVMe SSD, passing the acid test that Speccy and the Linux Disks command fail. The free version is fully functional or close, for 30 days. There are other programs to display system temperatures, CPU and GPU and even SSD or HDD, but this one is quite well done. I wonder how often it samples SMART data, or if it is simply on demand?

I would much like to see a program that samples SMART data once a day or provides it on demand. Then we all would not need divine intervention by Microsoft to incorporate SMART monitoring and reporting into Windows, sort of.
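One way such a once-a-day sampler could be sketched is with `smartctl` from smartmontools, which can emit JSON (`--json`, available in version 7.0 and later). This example was not part of the original discussion. The JSON keys used below reflect my understanding of smartctl's output layout and should be verified against your installed version; the device path and the helper names `read_smart` and `summarize` are hypothetical.

```python
import json
import subprocess


def read_smart(device: str) -> dict:
    """Run smartctl on one device and return its JSON report.
    Usually needs administrator/root rights. smartctl's exit status is a
    bit mask that can be nonzero even on success, so it is not checked."""
    result = subprocess.run(
        ["smartctl", "--json", "-H", "-A", device],
        capture_output=True, text=True, check=False,
    )
    return json.loads(result.stdout)


def summarize(report: dict) -> dict:
    """Pull a few health indicators out of a report (assumed key layout)."""
    table = report.get("ata_smart_attributes", {}).get("table", [])
    raw = {row["name"]: row["raw"]["value"] for row in table}
    nvme = report.get("nvme_smart_health_information_log", {})
    return {
        "passed": report.get("smart_status", {}).get("passed"),
        "reallocated_sectors": raw.get("Reallocated_Sector_Ct"),
        "nvme_percentage_used": nvme.get("percentage_used"),
    }


# Hand-written sample in the assumed layout, so the sketch runs without a drive.
sample = {
    "smart_status": {"passed": True},
    "ata_smart_attributes": {"table": [
        {"id": 5, "name": "Reallocated_Sector_Ct", "raw": {"value": 0}},
    ]},
}
print(summarize(sample))
# On a real system: summarize(read_smart("/dev/sda"))
```

Run once a day from cron or Windows Task Scheduler, with each summary appended to a log file, this would give a simple history of how the counters move over time.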

anonymous

Guest

February 7, 2022 at 11:20 am #2423696

Thank you for an excellent SSD article. I will be downloading the Clear Disk Info utility program. In addition to my multiple weekday image and file backups, I ‘clone’ my system SSD to an external HDD monthly. The ‘data’ SSD gets copied monthly. A small effort to protect against a catastrophic event.

anonymous

Guest

February 7, 2022 at 11:20 am #2423709

anonymous

Guest

February 7, 2022 at 11:20 am #2423720

I recently installed a Samsung EVO 500 GB drive, then downloaded and installed the Magician software and got the “selected drive does not support this feature” message for a number of the Magician topics. No thanks to Samsung, I found that the solution was to update the drive’s firmware. Afterwards the “selected drive…” messages stopped appearing.

The Magician software is deficient in explaining its settings, and it certainly was deficient about the need for a firmware update.

anonymous

Guest

February 7, 2022 at 11:21 am #2423761

There is a way to care for your SSD and HD drives that will extend their lives, recover bad sectors, refresh entire drives, is easy, and has been proven effective and safe for many years. (Not for Mac though.)

Just get SpinRite from Gibson Research and run it periodically. That’s all. It will save your sanity and hardware. End of worries!

Go to https://grc.com and read up. Lots of other good stuff there also.

It is a reasonable one time cost – no annual subscriptions – updates are free except for infrequent major versions. I’ve been running version 6.0 since 2005 and version 5.0 before that. Cost to upgrade from 5 to 6 was $29. If you get the current version 6.0 now the update to 6.1 is free and coming soon. It sounds like it will be awesome.

You’re welcome.

1 user thanked author for this post.

-

Ben Myers

AskWoody Plus

February 7, 2022 at 7:21 pm #2423880

SpinRite is great for what it does, but it does not deal with a hard drive whose number of bad sectors keeps getting progressively worse afterwards. Let’s say, for example, that a system with a spinning hard drive gets a bit of a bump, and the drive read-write heads momentarily touch the surface of the drive, scratching off some of the magnetic coating. SpinRite may be able to take care of the immediate problem, but the etched-off coating is now floating around inside the drive, with the possibility of an abrasive effect on other areas of the drive platter(s). There’s a reason drives are assembled and disassembled in clean rooms, absolutely free of dust and dirt.

I cannot advocate using SpinRite on an SSD, but Steve Gibson is better prepared to advise all of us on that topic.

1 user thanked author for this post.

-

NetDef

AskWoody_MVP

February 8, 2022 at 11:28 am #2423995

SpinRite will likely induce added wear on an SSD based on how it works. Having said that, as a last resort to data recovery, with intent to copy or clone that data to a replacement drive, and with intent to retire the damaged SSD after recovery is complete, running it in SpinRite Level 2 can be highly effective.

Sources:

https://twitter.com/sggrc/status/289454123005390848

https://community.spiceworks.com/topic/352439-is-spinrite-still-an-option-in-todays-world

Of course, if you are keeping good backups, you won’t need to go down that road.

~ Group "Weekend" ~

1 user thanked author for this post.

-

anonymous

Guest

February 14, 2022 at 9:01 am #2425145

Steve Gibson does not claim that SpinRite will make any drive last forever. In my opinion, he provides the best tool to handle your storage device intelligently and to know when to replace it.

1 user thanked author for this post.

-

OscarCP

Member

February 7, 2022 at 12:09 pm #2423784

Very interesting and timely article on an important issue.

Question: Is there a version of Clear Disk Info for Macs? If not, what else would be its equivalent?

My MacBook Pro ca. mid-2015 in: About this Mac/System Report/Storage provides only this SMART information on the SSD: “Verified”, or “Failing.”

WSstarvinmarvin

AskWoody Lounger

February 7, 2022 at 3:16 pm #2423823

My 960GB Sandisk Extreme Pro has been in daily use for 7 or 8 years, and is used for everything including games, some video and photo editing, Office, internet, and even as a DVR for several months until we got a dedicated 3TB WD Red HDD. Sandisk Toolbox says it still has 98% life remaining. It’s been the main drive in 2 different PCs, and it still runs fine. The 10-year warranty is a nice touch, too.

Going all the way back to the Kingston V100 96GB SSD we’ve never worn out an SSD. An early Mushkin 240GB SSD did fail suddenly after 18 months’ use, and they replaced it with a newer model which has an upgraded controller. That’s the only one I’ve ever seen fail.

Despite my confidence in SSDs we still make regular backups on Portable HDDs which we plug into a USB port to do the backup then unplug it for safekeeping (screw those ransomware bad guys!).

2 users thanked author for this post.

-

SupremeLaW

AskWoody Plus

February 14, 2022 at 10:06 pm #2425370

We’re now doing the same thing with an excellent StarTech 3.5″ external enclosure that supports 2 interfaces: eSATA and USB 3.0. The metal enclosure is cool, trayless and front-loading.

Yes, it only accepts 3.5″ SATA HDDs, but HDD cost per gigabyte has dropped enormously as SSDs are now preferred for speed.

We just spent $80 on another brand new WDC 2TB “Black” HDD, which was close to $300 several years ago.

The other benefit of these external enclosures is a separate ON/OFF switch: we now switch that external enclosure ON just long enough to run our backup task, then we switch it OFF.

This policy should mean that this 2TB HDD will run reliably for most of the 5-year warranty period, and Western Digital does honor their factory warranties, provided a new WDC drive is registered at their on-line warranty database.

1 user thanked author for this post.

packeterrors

AskWoody Plus

February 7, 2022 at 3:45 pm #2423829

“My 960GB Sandisk Extreme Pro has been in daily use for 7 or 8 years, and is used for everything including games, some video and photo editing, Office, internet, and even as a DVR for several months until we got a dedicated 3TB WD Red HDD. Sandisk Toolbox says it still has 98% life remaining. It’s been the main drive in 2 different PCs, and it still runs fine. The 10-year warranty is a nice touch, too.

“Going all the way back to the Kingston V100 96GB SSD we’ve never worn out an SSD. An early Mushkin 240GB SSD did fail suddenly after 18 months’ use, and they replaced it with a newer model which has an upgraded controller. That’s the only one I’ve ever seen fail.

“Despite my confidence in SSDs we still make regular backups on Portable HDDs which we plug into a USB port to do the backup then unplug it for safekeeping (screw those ransomware bad guys!).”

Agreed. I ran a Windows 2003 Server with SSDs in a RAID-1 configuration for 8 years without an issue. When we replaced the server, I took the old one home, formatted the drives and installed Windows XP on the system with C: and D:, no RAID. Then a few years later I gave the computer to a friend who was in need of a computer. The hardware finally died due to motherboard overheating, not SSD failure. I know SSDs do fail, as I’ve had a couple fail, but for the most part I’ve had far fewer SSD failures than I did with mechanical drives. My current computer has 4 years on it with the same SSDs. One drive says I’ve transferred 33.1 TB on it so far, with no signs of a failure yet.
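A back-of-the-envelope projection shows why write wear is rarely the limiting factor at this pace. The Python sketch below assumes the 33.1 TB figure counts data written and that the write rate stays constant. The 150 TB endurance rating is a hypothetical example value; the 2,000 TB case echoes the two-petabyte endurance experiment linked elsewhere in this thread.

```python
def years_until_endurance(endurance_tb: float, written_tb: float,
                          years_in_service: float) -> float:
    """Years remaining until total writes reach the endurance figure,
    assuming the average write rate so far continues unchanged."""
    rate_tb_per_year = written_tb / years_in_service
    return (endurance_tb - written_tb) / rate_tb_per_year


# 33.1 TB over 4 years is about 8.3 TB per year.
for endurance_tb in (150, 2000):   # hypothetical rating, then a 2 PB ceiling
    remaining = years_until_endurance(endurance_tb, written_tb=33.1, years_in_service=4)
    print(f"{endurance_tb} TB endurance: about {remaining:.0f} more years")
```

Either way the drive would be retired for other reasons long before the flash wore out, although this says nothing about sudden controller failures.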

1 user thanked author for this post.

edlight

AskWoody Plus-

Ben Myers

AskWoody Plus

OscarCP

MemberFebruary 7, 2022 at 4:36 pm #2423851I don’t play games, never keep many windows open for more than an hour, or keep the laptop on for more than 8 – 12 hours at a single stretch, before turning it off and calling it a day. I do watch movies and shows, both streaming and from my DVD collection. I do, now and then, some heavy number crunching for my job, in several runs a day of up to half an hour each. My computer is described in my signature panel, below.

Any idea how likely this use is to wear out an SSD, and which of the above would be the main culprit?

Ex-Windows user (Win. 98, XP, 7); since mid-2017 using also macOS. Presently on Monterey 12.15 & sometimes running also Linux (Mint).

MacBook Pro circa mid-2015, 15" display, with 16GB 1600 MHz DDR3 RAM, 1 TB SSD, a Haswell architecture Intel CPU with 4 Cores and 8 Threads model i7-4870HQ @ 2.50GHz.

Intel Iris Pro GPU with Built-in Bus, VRAM 1.5 GB, Display 2880 x 1800 Retina, 24-Bit color.

macOS Monterey; browsers: Waterfox "Current", Vivaldi and (now and then) Chrome; security apps. Intego AV

-

Ben Myers

AskWoody PlusFebruary 7, 2022 at 7:34 pm #2423886The usage of your MacBook that you’ve portrayed seems pretty unlikely to wear out the SSD with excessive write operations. Is this the original SSD, or one that was installed after the initial purchase? If you installed it yourself in 2020, it is still pretty new. You’ve got 16GB of memory there, cutting down on SSD writes, compared to the budget Macs with 4GB or 8GB. Great screen!

Leaving a computer on generally has no effect on the SSD or hard drive. Ditto watching movies and shows. If the number crunching makes intensive use of the SSD, it is perhaps the major risk factor.

Keep on!

1 user thanked author for this post.

-

OscarCP

MemberFebruary 7, 2022 at 10:13 pm #2423898Ben Myers: The original 1 TB SSD is by now 4.5+ years old and has 570 GB of free space left. It is in “Verified” condition, according to Apple’s reading of the SMART data, which I take to mean “it’s OK, for now.” The other message would be “Failing” or in plain English: “No good, back up everything, clone SSD and, since you don’t trust yourself, with good reason, to fix this, run to a not too distant repair shop you find on the Web, where they may know what to do, or not, but shall charge you anyway.” There is no third message.

Thanks for your advice and for starting this discussion.

-

SupremeLaW

AskWoody PlusFebruary 14, 2022 at 10:19 pm #2425375Without knowing a LOT more about your daily usage patterns, I venture to guess that environmental factors will make a much bigger difference for longevity, chiefly power conditioning, temperature control and cooling, and moisture control.

All our workstations are powered by APC battery-backup units, and they’ve saved my butt many times already.

Case in point, during the past 6 months, we’ve been slowly upgrading to 2.5 and 5.0 GbE dongles and add-in cards, to inter-operate smoothly with a TP-LINK high-speed switch.

The initial testing we did was to move several large drive images of C: across our LAN.

I should have expected this, but I was still surprised when that TP-LINK switch failed less than 30 days after purchase: my best guess is that it just over-heated.

So, the factory replaced it, and we jury-rigged 2 simple fans blowing cross-wise over that switch. With both fans running 24/7, we’ve had no more problem with the replacement TP-LINK switch.

Moral of this story: power conditioning and temperature control are both BIG DEALS!

wdburt1

AskWoody Plus

WSRandyH

AskWoody LoungerFebruary 7, 2022 at 10:50 pm #2423885Hi Ben..

I read the article on SSDs, so tried ClearDiskInfo.exe.

I use Crystal Disk Info, and they give different results! The disks ClearDiskInfo.exe says are not perfect, Crystal Disk Info says are okay, and vice-versa.

You can get Crystal Disk Info from https://crystalmark.info/en/

I would be interested in what you think is happening.

Thanks.

-

Ben Myers

AskWoody PlusFebruary 7, 2022 at 11:46 pm #2423913What I think is happening is differing interpretations of the same data, because there is no agreed-upon standard for SSD SMART data attributes. SKHynix is unwilling to release a technical description of how it uses SMART data in SSDs, as I noted in the article. Other manufacturers are not always forthright about SMART either.

3 users thanked author for this post.

-

SupremeLaW

AskWoody PlusFebruary 16, 2022 at 12:01 pm #2425744One theory I maintain about S.M.A.R.T. is the proprietary nature of controllers embedded in SSDs.

How such a controller defines and detects “errors”, and what such a controller does upon detecting “errors”, are a set of functions that are designed and implemented by the firmware programmers.

I understand entirely how a major manufacturer like Samsung would want those details to remain CONFIDENTIAL, because they are Samsung’s intellectual property, legally speaking.

Consider the obvious hoopla Intel generated upon initially announcing “Optane” SSDs.

I specifically remember comparing Samsung’s PCIe 3.0 NVMe M.2 SSDs.

The benchmarks repeatedly showed that Samsung’s NVMe M.2 SSDs were consistently averaging ~3,500 MB/second doing READs.

If we do the math, that performance came amazingly close to the theoretical maximum throughput aka “MAX HEADROOM”:

8 GT/s per lane / 8.125 bits per byte x 4 lanes = 3,938.5 MB/second MAX HEADROOM

(PCIe 3.0 uses 128b/130b encoding, i.e. 130 bits / 16 bytes = 8.125 bits per byte)
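The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name is made up for illustration, and it accounts for line encoding only, ignoring packet and protocol overhead, so real drives will land somewhat below the result.

```python
def pcie_max_mb_per_s(gt_per_s: float, lanes: int,
                      payload_bits: int = 128, line_bits: int = 130) -> float:
    """Theoretical ceiling in MB/s for a PCIe link, counting line encoding only."""
    # Bits on the wire needed to carry one byte of payload (8.125 for 128b/130b).
    wire_bits_per_byte = 8 * line_bits / payload_bits
    # GT/s -> MT/s, divided by wire bits per byte, times the lane count.
    return gt_per_s * 1000 / wire_bits_per_byte * lanes


x4 = pcie_max_mb_per_s(8.0, 4)  # PCIe 3.0, four lanes
x2 = pcie_max_mb_per_s(8.0, 2)  # PCIe 3.0, two lanes

print(f"PCIe 3.0 x4 ceiling: {x4:.1f} MB/s")
print(f"PCIe 3.0 x2 ceiling: {x2:.1f} MB/s")
print(f"3,500 MB/s is {3500 / x4:.0%} of the x4 ceiling")
```

With two lanes the ceiling is simply half of the four-lane figure, which is the point of the Optane comparison.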

By comparison, Intel’s first batch of “Optane” M.2 SSDs only used 2 PCIe 3.0 lanes, which immediately crippled their performance.

Samsung had every right to keep the details of their NVMe success completely and entirely CONFIDENTIAL, while filling zeroes in the S.M.A.R.T. data cells.

-

ECWS

AskWoody PlusFebruary 7, 2022 at 10:50 pm #2423891-

ECWS

AskWoody Plus

anonymous

GuestFebruary 7, 2022 at 10:50 pm #2423896You computer jocks seem to be so tied up in your computerese that you miss the most *basic* issue with SSDs: the physics of the devices. The devices are limited by the depth of the potential wells into which electrical charges are placed to store data.

If the wells are not deep enough, thermal effects can cause charge to leak out. Boltzmann’s law gives that probability for each potential well; given the large numbers of such wells (bits), the probability of data corruption becomes nonvanishing. Moreover, if the temperature increases, the probability of leakage from the wells increases – exponentially.

SSDs are suitable only for short-term storage.
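The exponential temperature dependence mentioned above can be illustrated with a textbook Arrhenius-style factor. This is a sketch under stated assumptions, not the poster's own calculation: the function name is hypothetical, and the 1.1 eV activation energy is an assumed illustrative value rather than a figure for any particular drive.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV per kelvin


def leakage_acceleration(t_cool_c: float, t_warm_c: float,
                         activation_ev: float = 1.1) -> float:
    """How many times faster charge loss proceeds at t_warm_c than at t_cool_c.

    Uses the Arrhenius relation exp(Ea/k * (1/T_cool - 1/T_warm)).
    activation_ev is an ASSUMED value for illustration only.
    """
    t_cool_k = t_cool_c + 273.15
    t_warm_k = t_warm_c + 273.15
    exponent = activation_ev / BOLTZMANN_EV_PER_K * (1 / t_cool_k - 1 / t_warm_k)
    return math.exp(exponent)


# Compare storage at a few warmer temperatures against a 25 C baseline.
for warm in (30, 40, 55):
    factor = leakage_acceleration(25, warm)
    print(f"25 C -> {warm} C: leakage roughly {factor:.1f}x faster")
```

Under this model each additional step in temperature multiplies the leakage rate rather than adding to it, which is why storage temperature matters so much for unpowered drives.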

2 users thanked author for this post.

-

NetDef

AskWoody_MVPFebruary 8, 2022 at 3:38 pm #2424057This is in fact true.

Most modern SSDs, when stored (no power) under 70F (21C) will retain their integrity for at least one year. Longer is possible but YMMV depending on the NVRAM type, quality, batch, etc. Too many variables. Higher storage temps can greatly shorten that metric.

We use SSDs as live operating drives, and HDDs for archival storage – either powered or cold.

For long storage (over one year but under ten) we prefer CMR HDD drives for speed and ease of backup restoration. For even longer time periods and for relatively small data sets (25GB per optical disk) we use archival grade Blu-Ray disks – or in the case where terabytes of long term storage are needed we rotate storage for critical records to new HDD media on a schedule.

Tape appears to be making a comeback for long term storage, but I can’t get over my mistrust of that media from the bad old days . . .

If you use a home backup solution – I always recommend a USB HDD based removable drive, and always have two. One online to receive backups and one offline. Rotate regularly. Put the in-service start date on a label on these drives, and replace them if they get wonky or older than five years.

~ Group "Weekend" ~

6 users thanked author for this post.

-

DaveBoston

AskWoody PlusFebruary 8, 2022 at 3:53 pm #2424058NetDef, can you clarify please?

“Most modern SSD’s, when stored (no power) under 70F (21C) will retain their integrity for at least one year. Longer is possible but YMMV depending on the NVRAM type, quality, batch, etc. Too many variables. Higher storage temps can greatly shorten that metric”

I am new to SSDs but I have thumb drives a decade old or more that are still working fine. I am surprised that silicon would be unstable, unless you are talking about solder fingers growing between leads, and I thought getting rid of PbSn solder fixed that, too.

Inquiring minds…

-

mshunfenthalaw

AskWoody Plus

-

-

-

SupremeLaW

AskWoody PlusFebruary 14, 2022 at 10:27 pm #2425378Do you have any experience with M-DISCs?

Christopher Barnatt at YouTube put one to a torture test: he wrote data to an M-DISC then put it in a freezer for a few days. After it thawed, Chris was still able to read all the raw data without any errors.

We recently purchased a few slim ODD writers that are supposed to write M-DISCS too, but we haven’t gotten around to trying any for large files like drive images of C: .

1 user thanked author for this post.

-

NetDef

AskWoody_MVPFebruary 16, 2022 at 12:31 pm #2425755We have been using M-Disks for a few years now, but are now researching alternatives. It’s becoming apparent that the media is getting hard to find, and the smaller DVD sized capacities seem to be discontinued.

The DoD torture tested M-Disks a few years ago and results seemed pretty good.

Media obsolescence as well as their readers is a major concern. If I have a disk rated for hundreds of years, and if that data matters, will anyone be able to read it then?

But . . . this is starting to develop into an entirely new topic, one that is VERY interesting but might deserve its own thread.

~ Group "Weekend" ~

2 users thanked author for this post.

-

lmacri

AskWoody PlusFebruary 16, 2022 at 2:20 pm #2425789But . . . this is starting to develop into an entirely new topic, one that is VERY interesting but might deserve its own thread.

Agreed. Before posting again users might want to re-read Ben Myers’ original article, Our World is Not Very S.M.A.R.T. About SSDs, regarding the ability (or inability) of diagnostic tools like Clear Disk Info and Samsung Magician to report meaningful S.M.A.R.T. data and accurately predict potential SSD failures. Please don’t stray too far off-topic.

-

-

OscarCP

MemberFebruary 9, 2022 at 6:22 pm #2424313I do agree with the physics, as explained, but not with the conclusion: I, for example, have been using the SSD in my Mac (see technical specs in my signature panel) for more than 4.5 years with no trouble whatsoever and I am certainly not the only one on planet Earth that can make such a claim. If 4.5+ years is “short-term”, I wonder what “long-term” might be: a decade, a century, a millennium, an aeon?

As to making regular backups to, in my case, an external HDD, yes, of course, that is much recommended as standard practice whether one has an SSD or an HDD inside one’s machine.

WSstarvinmarvin

AskWoody LoungerFebruary 9, 2022 at 8:45 pm #2424324We have the following SSDs in daily use since:

Kingston V+100 96GB – 2011

Kingston V+200 64GB – 2012

Intel X25-M 80GB – 2012

Sandisk Ultra 120GB – 2013 (failed in 2015; replaced under warranty)

Sandisk Extreme 240GB – 2013

Sandisk Extreme Pro 960GB – 2014

Samsung 840 250GB – 2014

WD Blue 3D 500GB – 2018

WD Blue 3D M.2 500GB – 2020

Sandisk Extreme Pro, Samsung 840, and WD Blue 3D 500GB are all heavily used for large game download/play/delete/repeat, video and photo editing and, for the Sandisk Extreme Pro, recording/viewing/deleting/repeat TV programs.

Thus, 7 of the SSDs listed above have seen daily usage for 8 years or more, with several of them worked hard. The shortest remaining life among them all is the Kingston V+200 at 74% remaining. That’s not short term.

Sandisk Extreme Pro and Samsung 850 Pro (we don’t have one) were both designed for professional use involving frequent heavy write activity. They both have a 10-year warranty which is not pro-rated.

In the same period we’ve seen two Seagate HDDs fail (a 1TB and a 2TB), plus a Toshiba 1TB laptop HDD.

I repair or troubleshoot computers for several friends, none of whom have experienced a single SSD failure.

An Intel rep told a seminar which I attended that most (but not all) SSD failures are a result of controller failure, not the NAND memory chips. Of course, if you write enough TBs of data for long enough, then an SSD will surely cease working. Typically, that would be longer than you ever keep a computer. Cheers!

Paul T

AskWoody MVPFebruary 10, 2022 at 3:36 am #2424371miss the most *basic* issue with SSDs: the physics of the devices.

That’s why manufacturers use ECC. Loss of data from a few cells does not affect data integrity.

If you want to test – and have the disk self correct – the data on a stored disk, simply read all the files from the disk.

See this post for more details: #2318643

cheers, Paul
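The "read all the files" check described above could be scripted along these lines. This is a minimal sketch, with a hypothetical function name; it only reads the data and reports files that could not be read, and any correction is left to the drive itself. The operating system may also serve some reads from its cache, so a freshly connected drive gives the most meaningful result.

```python
import os


def read_all_files(root: str, chunk_size: int = 1024 * 1024):
    """Read every file under root from start to end.

    Returns (files_read, bytes_read, failures), where failures is a list of
    (path, error message) pairs for files that could not be read.
    """
    files_read = 0
    bytes_read = 0
    failures = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as handle:
                    # Read in chunks so large files do not fill memory.
                    while chunk := handle.read(chunk_size):
                        bytes_read += len(chunk)
                files_read += 1
            except OSError as exc:
                failures.append((path, str(exc)))
    return files_read, bytes_read, failures
```

Calling `read_all_files("/path/to/stored/disk")` (the path is a placeholder) returns the counts plus a list of unreadable files; an empty list means every file was read without an I/O error.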

OscarCP

MemberFebruary 7, 2022 at 10:50 pm #2423897OK, I followed Ben Myers’ advice here #2423866: “Your best bet is to go to everymac.com. https://everymac.com/ultimate-mac-lookup/ Enter the A-number and the EMC number, and the website will tell you what’s inside your MacBook.” I found the A model number under the laptop and the EMC number by looking at a list here:

https://everymac.com/systems/by_capability/mac-specs-by-emc-number.html

So I did: The A number, 1398, is not unique to a particular computer model, but shared by several, from 2013 through 2015. The EMC was more useful, and I found with it that the SSD is not soldered by clicking on a link in the EMC page to get here:

And, from there here, and to the point:

Conclusion: my Mac’s SSD is not soldered:

“Apple does not intend for end users to upgrade the SSD in these models themselves. The company even has used uncommon “pentalobe” screws — also called five-point Torx screws — to make the upgrade more difficult. However, access is straightforward with the correct screwdriver, the SSD modules are removable, and Apple has not blocked upgrades in firmware, either. There are two significantly different SSD designs for these models, though.

Specifically, the “Mid-2012” and “Early 2013” models use a 6 Gb/s SATA-based SSD whereas the “Late 2013,” “Mid-2014” and “Mid-2015” models use a PCIe 2.0-based SSD. These SSD modules are neither interchangeable nor backwards compatible with earlier systems.

As a result, third-parties, like site sponsor OWC have released a 6 Gb/s SATA-based SSD upgrade with a compatible connector for the “Mid-2012” and “Early 2013” models and another PCIe 2.0-based flash SSD with a compatible connector for the “Late 2013” and subsequent MacBook Pro models.

By default, from testing the “Late 2013” and “Mid-2014” models, OWC discovered that when a “blade” SSD from a Cylinder Mac Pro is installed in one of these systems, it “negotiates a x4 PCIe connection versus the stock cards, which negotiate a x2 PCIe connection.” This means that these Retina MacBook Pro provided more than 1200 MB/s drive performance, a huge jump from the standard SSD.”

-

Ben Myers

AskWoody PlusFebruary 8, 2022 at 9:14 am #2423969One small bit of caution here. I may have overstated the ease of replacement of a proprietary Mac NVMe SSD. Physical replacement of an SSD is the easy part. Remove pentalobe screws from the bottom, remove bottom cover, remove small capacity SSD, install large capacity SSD and replace cover and screws.

Installing a fresh copy of MacOS is straightforward (Command-R when powering up), as long as one has a working internet connection to give access to the Apple mother ship.

If you want to clone the smaller capacity SSD to the larger SSD thereby retaining all your data and licensed programs, life gets more complicated. Easiest way is to use the Disk Utility in the MacOS Recovery System to clone the smaller SSD to an external USB drive, then use it again to clone from the external drive to the newer high capacity drive.

There are USB adapters that allow one to attach proprietary Mac SSD to a USB port, but it makes no sense to buy one unless one does this sort of job repeatedly.

Paladium

AskWoody PlusFebruary 8, 2022 at 6:50 am #2423944oldergeeks.com seems like it’s unstable. Can’t even reach the page to d/l the file. Major Geeks has it if anyone else has problems.

https://www.majorgeeks.com/files/details/clear_disk_info.html

Kathy Stevens

AskWoody Plus-

Ben Myers

AskWoody PlusFebruary 8, 2022 at 9:18 am #2423971Really good surge protectors or a UPS of ample capacity! Really good power supplies offer some protection for the rest of the computer. The magnitude of the surge, usually when power is restored, has a lot to do with it. Here in the US, a surge of 130v is almost negligible. 200v will destroy not just an SSD, but most everything else inside a computer.

1 user thanked author for this post.

-

Kathy Stevens

AskWoody PlusFebruary 8, 2022 at 9:44 am #2423976We use CyberPower OR1500pfclcd surge protectors.

They are part of CyberPower’s PFC Sinewave UPS series and we use them to support our work stations, modems, and routers.

Specifications are as follows:

- Power Rating 1500 VA/1050 Watts

- Topology: Line Interactive

- Waveform: Sine Wave

- Plug type & cord: NEMA 5-15P, 6 ft. cord

- Outlet types: 8 x NEMA 5-15R

- Communication: USB, Serial

- Management software: PowerPanel Business Edition

- Warranty: 3 year

- Connected Equipment Guarantee: $200,000

For less expensive/sensitive equipment we use Tripp Lite Isobars. The Tripp Lite Isobars are not as robust as the CyberPower units but come with $50,000 Ultimate Lifetime Insurance. We have used the insurance three times over the last 20 years to replace electronics that have failed due to power surges (television, WIFI radio, and microwave).

3 users thanked author for this post.

-

NetDef

AskWoody_MVPFebruary 8, 2022 at 11:31 am #2423997We’ve been using that exact model of Cyberpower’s UPS for years now with excellent results for workstations and network racks in problematic power situations.

Unfortunately sourcing new replacements (and even their batteries) has become very hit and miss this year, not to mention their prices have gone way up.

~ Group "Weekend" ~

-

Kathy Stevens

AskWoody PlusFebruary 8, 2022 at 1:39 pm #2424032If you are in the US/Canada, have you tried finding a Cyber Power reseller by going to their web site at https://www.cyberpowersystems.com/reseller-search/ ?

The page has links to their resellers including, but not limited to, Amazon, B&H, Best Buy, CDW, Costco, Fry’s, Home Depot, Newegg, MicroAge, Office Depot, Provantage, Staples, Walmart, etc.

1 user thanked author for this post.

-

-

-

Paul T

AskWoody MVPFebruary 9, 2022 at 1:16 am #2424175A power surge will sound the death knell for SSD drives

A power surge only affects the PSU, which isolates and provides regulated power to the other components. The PSU is designed to cope with a range of input voltages, but too much or too little will cause the PSU to provide the wrong voltage to internal components. Some internal components will tolerate the improper voltage and some won’t, but none will be unaffected in some way.

If you need reliability or have power supply issues, there are two things you need to do.

Backup regularly, because no solution is 100% effective.

Add a UPS in front of the PC(s) to limit the voltage changes / provide power during low / no power times.

cheers, Paul

2 users thanked author for this post.

-

Kathy Stevens

AskWoody PlusFebruary 9, 2022 at 10:37 am #2424227We had a HP Windows 10 work station that was exposed to a power surge about a year ago.

We installed a new SSD, used its recovery software to restore it to an as-new condition, reinstalled its software, and recovered its working files from a backup.

The machine has been working fine ever since.

Thus my comment related to SSD drives and their exposure to power surges.

2 users thanked author for this post.

-

Paul T

AskWoody MVP -

SupremeLaW

AskWoody PlusFebruary 15, 2022 at 12:42 pm #2425536Our AC power cabling always enforces the following “policy”:

CHEAP links BURN first

EXPENSIVE links BURN last

Thus, at the 110V wall outlet, we plug in a relatively cheap surge protector with multiple outlets. Ideally, the latter surge protector has a separate ON/OFF switch for each outlet.

Next in line is a high-quality UPS / battery backup unit: we’ve been using the APC brand for years, and they work great, particularly if their PowerChute Personal software is installed with the required USB cable installed correctly.

Last in line is the quality PSU that we try to install in every workstation that we build. Presently, our hands-down favorite is Seasonic brand. Their Tech Support is superb.

If a surge burns the surge protector but leaves the UPS intact, you’re out maybe $10 to $20.

If a surge burns the UPS too, you’re out maybe another $100.

If a surge burns all the way thru the PSU, your system probably suffered the direct hit of a lightning strike: but, the good news is that the latter event is highly improbable, and there are other ways to shield computers from such worst-case events.

Just our “policy” here. Hope this helps.

1 user thanked author for this post.

-

Ascaris

AskWoody MVPFebruary 8, 2022 at 9:50 am #2423978With a conventional hard drive, there are two kinds of failures that are possible (roughly speaking)… mechanical failure and electronic failure. The various moving parts will eventually wear out, and that will necessarily cause problems.

Whether you get any warning about this is a luck of the draw thing. You might, but it’s by no means certain. Even if there is some warning, it doesn’t mean there won’t be any data loss by the time you are aware there is a problem.

The second kind of failure is an electronics failure. Hard drives have RAM (for buffering/caching) and CPUs like a PC (or a SSD), and these components can fail. When this happens, it is quite likely to be of the “bolt from the blue” type that no one saw coming.

SSDs, of course, have no moving parts, so the first kind of failure can’t happen. The second kind can, though. It’s not as obvious, but there is also a difference between a media wear-out failure and any other electronics failure, in that one is an expected, fairly predictable thing that you can see coming from the SMART data, while the other electronics failure can’t really be predicted by SMART, just as with the hard drive whose electronics fail suddenly.

A lot of people are put off by the ticking time bomb aspect of the SSD, where you can watch it losing its life from the moment you start using it. The hard drive is a ticking time bomb too… you know it’s going to fail at some point, but there’s no inkling of when it might be, so it’s easier to kind of think of it like it isn’t going to happen (until it actually does).

I’ve got one SSD that I’ve beaten on pretty hard since I bought it (a Samsung 840 Pro 128GB). I’ve had the swap/paging file on it for the whole time I have had it, and Firefox used to have some pretty nasty memory leaks that caused it to consume all of my RAM very frequently, and it was only the speed of the SSD that kept the system limping along until I could close Firefox and get the RAM back. Still, that now 8 year (and one month) old drive is still in service. The drive is now down to 67% of its rated service life, and it has had just over 42,000 power-on hours.

Note that the rated life doesn’t mean that the drive just dies when the clock runs down to zero. It was like this with at least one model of Intel drive (the electronics would turn the drive to read-only instantaneously, and once it was powered down, it would brick itself, so you could not even use it in read-only mode to get your data), but in the famous Tech Report test of SSDs, where lots of drives were written to until they died, the 840 Pro was only about half done when it got to its officially worn-out status.
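For a rough sense of scale, the 840 Pro figures quoted above (67% of rated life left after 8 years and one month) can be extrapolated in a straight line. This is only a sketch with a hypothetical function name. It assumes wear keeps accumulating at the same average pace, and it says nothing about the sudden electronics failures that SMART cannot predict.

```python
def years_until_rated_wearout(years_in_service: float,
                              percent_life_remaining: float) -> float:
    """Linear extrapolation of the time left until rated life reaches 0%."""
    percent_used = 100.0 - percent_life_remaining
    if percent_used <= 0:
        raise ValueError("no measurable wear yet; cannot extrapolate")
    # Average wear rate so far, in percentage points per year.
    wear_per_year = percent_used / years_in_service
    return percent_life_remaining / wear_per_year


# Figures from the post: 8 years and 1 month in service, 67% remaining.
remaining_years = years_until_rated_wearout(8 + 1 / 12, 67)
print(f"About {remaining_years:.1f} more years at the same pace")
```

At that pace the drive would take about 16 more years to reach its rated wear-out point, which, as noted above, is not necessarily the point at which it stops working.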

The most recent hard drive failure I had was the sudden, bolt-from-the-blue type. I was upgrading my Asus laptop (Core 2 Duo era) from XP, and while the drive had a lot of hours on it (~23k, if I recall) and was approaching five years old, it still worked perfectly, with no SMART data worries and no misbehavior of any kind. It was a reliable workhorse.

And then, during the installation of 7, it quit. No noise, no warning at all. It never read or wrote another byte of data. The warranty was five years, so I contacted Seagate and sent it back under warranty, just under the wire.

That drive had a lot of hours and a lot of years, at ~22,000 and nearly 5, respectively, but my Samsung 840 Pro SSD has it beat, with three more years and nearly double the active hours. It will fail someday, but I have backups, so I will leave it in and keep getting my money’s worth out of it all these years later!

Dell XPS 13/9310, i5-1135G7/16GB, KDE Neon 6.2

XPG Xenia 15, i7-9750H/32GB & GTX1660ti, Kubuntu 24.04

Acer Swift Go 14, i5-1335U/16GB, Kubuntu 24.04 (and Win 11)

2 users thanked author for this post.

Ben Myers

AskWoody PlusFebruary 8, 2022 at 3:24 pm #2424050Urged on by discussion here, I tried an Inateck all-purpose USB 3.0 adapter for hard drives, bought a couple of months ago, for the first time. It is all purpose because it handles SATA drives and both notebook 44-pin 2.5″ and desktop 40-pin 3.5″ parallel ATA drives. ClearDiskInfo shows the SMART attributes for the SATA drive I connected up, but Speccy gets confused. So the Inateck implements the USB Attached SCSI Protocol (UASP) to allow SMART data to be seen over USB 3.0. I have not yet put it to the acid test of seeing SMART data for a PATA drive. The Inateck unit is affordable at around $30, well worth it for me.

1 user thanked author for this post.

Ben Myers

AskWoody Plus-

OscarCP

MemberFebruary 8, 2022 at 6:04 pm #2424087Ben Myers: What is a USB adapter? How is it used? Inside a computer or outside of it? I have never heard of it, or seen one, other than the one in the photo shown by you. I may not be the only one here in the same situation. Perhaps you could explain? If you did, I’ll thank you.

-

Ben Myers

AskWoody PlusFebruary 8, 2022 at 11:55 pm #2424150Best to show, along with tell. There are three types, all attaching to a USB port on a computer.

For most purposes, the Inateck one shown in a photo previously handles a 2.5″ SATA solid state drive as well as other kinds of drives, both old-time spinning and SSD. Attach a SATA drive to the SATA connector, provide power to the Inateck, connect the Inateck adapter to a USB port with a cable.

For any of these gizmos, the drive shows up as an external drive, whether on a MacOS, Windows or Linux computer.

I do not have a photo for the second one, to which an NVMe SSD is attached, and which also plugs into the computer’s USB port with a cable.

The third one is intended only for MacBook SSDs, which use a proprietary, Apple-only edge connector, very different from a standard NVMe SSD. Same idea here. Attach your MacBook SSD to the small board, then connect the small board to a USB 3.0 port with the supplied cable, aluminum housing optional. The photo shows one very similar to the one(s) I have here for MacOS data recovery.

2 users thanked author for this post.

-

Cybertooth

AskWoody PlusFebruary 8, 2022 at 11:56 pm #2424153@OscarCP, here is an Amazon.com page for the product Ben referenced.

This thingy lets you hook up IDE and SATA drives to a computer via a USB cable. You plug the desired drive in the appropriate connector, connect one end of the USB cable to the dock and the other to the computer. All connections are external, no opening of computer cases is required.

I don’t have this exact device, but I have others like it, as well as a couple of docking stations like this one. These serve much the same purpose as the Inateck; some models feature dual SATA connectors but this one in particular has one SATA and one IDE.

1 user thanked author for this post.

-

OscarCP

MemberFebruary 9, 2022 at 1:09 am #2424172Thanks Ben Myers and Cybertooth:

“… All connections are external, no opening of computer cases is required.”

I like that. So this is one way to, among other things, copy (or clone) the Mac SSD to another, external (at least at the time) SSD. Without attempting major surgery on the Mac. (Instead of doing that and then finding fewer pentalobe screws than the ones one removed to open the Mac …)

Good to know. I’ll keep this in mind.

-

SupremeLaW

AskWoody PlusFebruary 14, 2022 at 10:43 pm #2425387One of the problems that less experienced users may not appreciate initially, is insufficient DC power that is provided by any given USB port.

This problem occurs most frequently with older USB 2.0 ports, but it can also occur with newer integrated USB 3.0 ports if the motherboard’s chipset doesn’t meet the minimum current specifications.

The easiest way around this non-obvious barrier is a “Y” USB adapter cable that plugs into 2 x USB ports: one transmits data, and the other simply supplements the USB device with extra DC power.

This problem becomes obvious if one is installing a USB add-in card into an empty PCIe expansion slot: if the card has a Molex or SATA power connector, a power cable from the PSU needs to plug into that connector to provide the USB port with all the DC power any device could possibly need.
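The power-budget point above can be put in numbers. This is a sketch under stated assumptions: the limits are the nominal per-port figures at 5 V (500 mA for USB 2.0, 900 mA for USB 3.0), the 1,000 mA peak draw is a hypothetical drive rather than a measured one, and the function name is made up for illustration.

```python
# Nominal current available from a single standard port, in milliamps at 5 V.
USB_PORT_LIMIT_MA = {"usb2": 500, "usb3": 900}


def has_enough_current(device_peak_ma: int, ports: list[str]) -> tuple[bool, int]:
    """Return (is_enough, available_ma) for a device fed from the given ports.

    Listing two ports models a "Y" cable drawing power from both.
    """
    available_ma = sum(USB_PORT_LIMIT_MA[port] for port in ports)
    return available_ma >= device_peak_ma, available_ma


HYPOTHETICAL_DRIVE_PEAK_MA = 1000  # assumed spin-up draw, for illustration only

for ports in (["usb2"], ["usb3"], ["usb2", "usb2"]):
    ok, available = has_enough_current(HYPOTHETICAL_DRIVE_PEAK_MA, ports)
    verdict = "enough" if ok else "NOT enough"
    print(f"{' + '.join(ports)}: {available} mA available, {verdict}")
```

Real ports and cables vary, so treat the totals as an upper bound on what a drive can count on rather than a guarantee.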

1 user thanked author for this post.

-

-

-

WSstarvinmarvin

AskWoody LoungerFebruary 9, 2022 at 2:09 am #2424182 -

DaveBoston

AskWoody Plus

-

SupremeLaW

AskWoody PlusFebruary 15, 2022 at 6:04 pm #2425625Speaking of USB ports, many modern motherboards come with 2 entirely different types of USB connectors.

One type is most often available at the rear I/O panel where RJ-45 and audio ports are also located.

Another type is much less obvious because it consists of pin headers that are mounted vertically at the factory, at right angles to the motherboard plane.

As such, those pin headers are available for customizing a PC’s USB cabling options.

One popular way of utilizing those pin headers is to attach a compatible cable that adds more USB ports to an otherwise empty 3.5″ or 5.25″ drive bay.

Similarly, a brand new chassis should come with one or more of those cables, to activate USB ports mounted in the chassis front panel.

For a less popular example, a simple adapter cable attaches the block connector at one end to an integrated pin header, and the other end is a standard USB Type-A connector.

We recently deployed the latter setup by “concealing” a 256GB flash drive inside a small form factor HP workstation. That flash drive plugs directly into the Type-A connector on the latter simple adapter cable.

Even though the latter flash drive is only USB 2.0, it still works fine for doing routine backups of third-party system software. And, cable management is no problem because that adapter cable is only about 8″ long overall.

One could, for example, maintain the latest drive image of C: on such a “concealed” USB flash drive. It won’t be as fast as USB 3.0, but the convenient availability recommends this design highly.

Kathy Stevens

AskWoody PlusFebruary 8, 2022 at 4:38 pm #2424068We often look to the manufacturer’s warranty policy for an estimate of the useful life of equipment.

In the case of Western Digital, they offer a 5-year limited warranty on their 250 GB, 500GB, 1 TB, 2 TB, and 4 TB WD Blue SATA SSD 2.5”/7mm cased internal drives.

1 user thanked author for this post.

-

SupremeLaW

AskWoody Plus

anonymous

GuestFebruary 9, 2022 at 7:46 am #2424206Thank you for this timely article having just installed my first NVMe in a new build. It’s a Samsung 980 Pro 500GB. Initially I installed Linux on it, just to test the rig and it ran OK.

I’ve now copied a working Windows 7 partition onto a HD (not the SSD) and boot from that. The rig (an MSI B550M with an AMD Ryzen™ 7 3800X) does not support Win7, so I installed some drivers from AMD marked Samsung (secnvme.sys and secnvmeF.sys). Windows Disk Mgt sees the drive as a 512MB EFI System Partition (healthy) and a 465.2GB Primary Partition (healthy).

I’ve just started Win 7 and Crystal Disk is showing Red Bad for the SSD. Does Crystal Disk expect the SSD to have Windows on it (e.g. does it need the Windows drivers)?

The Red is against Available Spare 0000000000 and Yellow against Percentage Used 00000000FF. Other attributes are Unknown

Alan

-

Ascaris

AskWoody MVPFebruary 9, 2022 at 3:02 pm #2424285The drivers do not need to be on the SSD. If they are installed in Windows, that’s all that is needed, regardless of where Windows lives.

I would say the drive is fine. The SMART implementations are notoriously varied, and CrystalDiskInfo is probably just misinterpreting the numbers it sees. If it were being reported bad by the utility from the drive manufacturer, though, I would contact them for assistance. You can always do that if you are concerned, but if it were mine I’d be fine with it. I am always making backups, though, just because failures do happen.
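As a rough sanity check on the raw values quoted above, here is a minimal sketch. It assumes the two fields follow the NVMe health log convention, where Available Spare is a percentage from 0 to 100 and Percentage Used is capped at 255 (hex FF). The function names are made up for illustration and are not part of CrystalDiskInfo or any vendor tool.

```python
def parse_raw(raw_hex: str) -> int:
    """Convert a raw hex string, as displayed by a SMART utility, to an integer."""
    return int(raw_hex, 16)


def sanity_check(available_spare_raw: str, percentage_used_raw: str):
    """Decode two NVMe health fields and flag values that look implausible.

    Assumption: Available Spare is a 0-100 percentage and Percentage Used
    saturates at 255, as in the NVMe health log.
    """
    spare = parse_raw(available_spare_raw)
    used = parse_raw(percentage_used_raw)
    notes = []
    if spare > 100:
        notes.append("Available Spare above 100% is out of range")
    if used == 255:
        notes.append("Percentage Used is at its 255 cap")
    if spare == 0 and used == 255:
        notes.append("both fields at their extremes: suspect the reading, not the drive")
    return spare, used, notes
```

Fed the values from the post, `sanity_check("0000000000", "00000000FF")` decodes to 0% spare and 255% used. On a drive that was only just installed, that combination looks more like a failed read through the Windows 7 driver than like real wear, which fits the suggestion to cross-check with the manufacturer's own utility.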

On that topic, I took my Acer Swift 1 out of mothballs (not literally) last night to check and see if Windows was still installed on it, and it was, so I started to boot it to check something, and it got about halfway there and then turned off. Power plugged in, but battery stopped charging, and it won’t respond to anything.

I only mothballed the Swift because its role was taken by my Dell XPS 13, but it had been working fine until then.

Failures happen, and often with zero warning. This time it was presumably the Swift’s motherboard (as it encompasses nearly everything), but it could have as easily been a SSD or a hard drive.

Dell XPS 13/9310, i5-1135G7/16GB, KDE Neon 6.2

XPG Xenia 15, i7-9750H/32GB & GTX1660ti, Kubuntu 24.04

Acer Swift Go 14, i5-1335U/16GB, Kubuntu 24.04 (and Win 11)

2 users thanked author for this post.

-

WSstarvinmarvin

AskWoody LoungerFebruary 9, 2022 at 3:39 pm #2424290While unlikely, it is just possible that the un-mothballed ACER Swift needed to be plugged in for 10 minutes to an hour before turning it on. Sometimes electronics that have been in storage for a while like to sit in a warm, dry location so that any condensation, however slight, has a chance to evaporate. Also, some battery operated devices need at least a few minutes on charge before powering up.

-

wavy

AskWoody PlusFebruary 9, 2022 at 11:31 am #2424238🍻

Just because you don't know where you are going doesn't mean any road will get you there.

2 users thanked author for this post.

-

Paul T

AskWoody MVP -

Ben Myers

AskWoody PlusFebruary 10, 2022 at 1:40 pm #2424459To repeat what I stated in my article, if there were standards for SSD SMART attributes and their meaning, all of the software that looks at them would have a consistent way of interpreting what they mean. There are no SSD SMART standards today, so every SSD manufacturer does what it wants, and most do not bother to tell us what they do. As a result, every piece of software that looks at SMART has latitude to interpret results differently. And they do, as seen by all the commentary here.

If anything, this is a worse embarrassment for the computer industry than the failure of Microsoft Windows, Apple macOS and various Linux flavors (at least the ones I’ve tested) to give timely notification of hard drive and SSD reliability issues, before we all have to cope with BSODs, unbootable Macs and kernel panics.

1 user thanked author for this post.

-

SupremeLaW

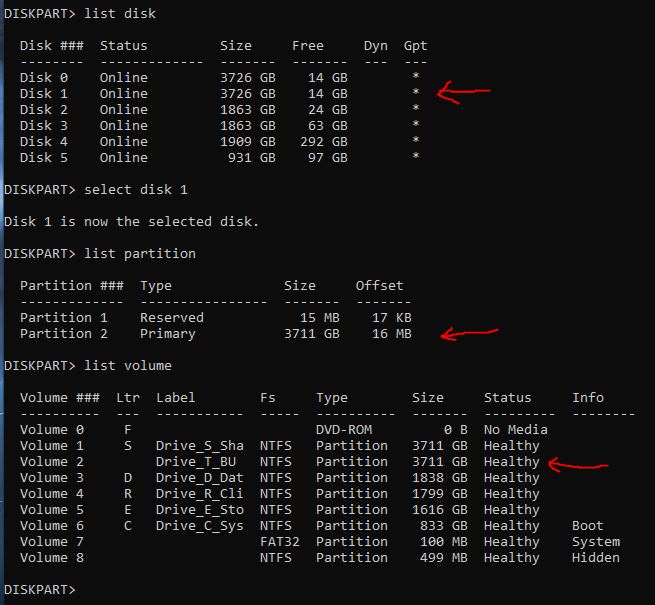

AskWoody PlusFebruary 15, 2022 at 3:00 pm #2425570We got frustrated with the lack of “granularity” in the Error checking options available in “My Computer | Properties | Tools”.

So, we “bit the bullet” and wrote a complex BATCH program that passes command line options to the CHKDSK command, and runs that command on all partitions active in any one workstation.

C: gets handled differently in that BATCH program: chiefly, it skips C: if the user has requested CHKDSK to “fix” errors with the “/f” option.

That’s no big deal, because CHKDSK can always be launched separately to “fix” C: (requiring a RESTART).

CHKDSK is smart enough to inform the User if either a DISMOUNT or a RESTART is required.

Here’s a typical invocation of our DODISKS.bat BATCH program:

dodisks /v /c /i /x

Each of those command line options is explained in the CHKDSK help:

chkdsk /?

For example, “/x” forces a dismount without requiring User intervention. Without that option, CHKDSK will prompt the User to confirm a dismount. Either way, the partition is re-mounted when CHKDSK is done checking it.

When DODISKS.bat finishes, we simply scroll back to the top and search visually for any reported anomalies. Then, it’s a piece o’ cake to run CHKDSK again on each partition that may need “fixing” of some kind.

In this way, DODISKS.bat can run unattended. Or, if I have nothing else to do, I can sip a fresh cup of coffee while I watch it plow thru all active partitions in one of our workstations.

It would be nice if future versions of CHKDSK supported an option to write anomalies in a .txt file that is specified on the command line.
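For readers who want something similar without writing BATCH, the approach described above can be sketched in Python. This is not the DODISKS.bat program itself, whose source is not shown here; the function names, the default system drive and the log file are all assumptions. The sketch also treats “/r” and “/x” as “fix” requests alongside “/f”, because the CHKDSK help says both imply /F.

```python
import subprocess

# Options that make CHKDSK attempt repairs; per "chkdsk /?", /r and /x imply /f.
FIX_OPTIONS = {"/f", "/r", "/x"}


def build_chkdsk_commands(drives, options, system_drive="C"):
    """Return one chkdsk command (as an argument list) per drive letter.

    The system drive is skipped when a fix was requested, since repairing
    it needs a restart and is better launched separately.
    """
    wants_fix = any(opt.lower() in FIX_OPTIONS for opt in options)
    commands = []
    for drive in drives:
        letter = drive.rstrip(":\\").upper()
        if wants_fix and letter == system_drive.upper():
            continue
        commands.append(["chkdsk", f"{letter}:", *options])
    return commands


def run_and_log(commands, log_path):
    """Run each command in turn and collect its output in one text file."""
    with open(log_path, "w", encoding="utf-8") as log:
        for cmd in commands:
            result = subprocess.run(cmd, capture_output=True, text=True)
            log.write(f"=== {' '.join(cmd)} (exit code {result.returncode}) ===\n")
            log.write(result.stdout)
```

A call such as `run_and_log(build_chkdsk_commands(["C", "D", "E"], ["/v", "/c", "/i", "/x"]), "chkdsk_report.txt")` from an elevated prompt would check D: and E: and leave a report that can be searched for anomalies afterwards, which goes some way toward the wished-for .txt output.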

-

-

Kathy Stevens

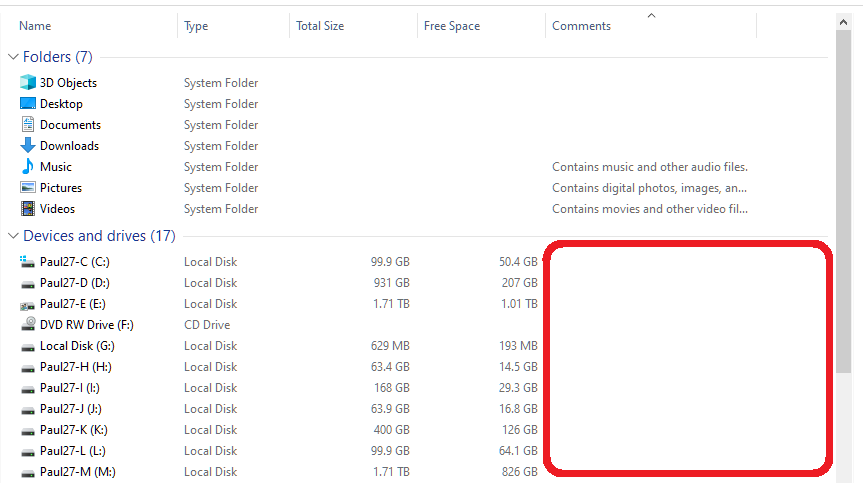

AskWoody PlusFebruary 9, 2022 at 7:20 pm #2424320Based upon the information contained in this thread, I am beginning to understand why HP is configuring/delivering our new workstations with two drives:

- 256 GB TLC Solid State Drive

- Size: 256 GB

- Interface: SATA

- Type: TLC Solid State

- Width: 2.5 in (6.35 cm)

- 2 TB HDD

- Size: 2 TB

- Interface: SATA

- Rotational speed: 7200 rpm

Our practice has been to replace the 256 GB SSD with a 2 TB SSD for operating software and storage while using the second 2 TB traditional drive for backups.

Moving forward we will keep the 256 GB SSD for Windows and programs such as Microsoft Office, use the traditional 2 TB HDD to provide stable data storage, and add a third traditional drive for backups.

Thank you all for your participation in this thread

-

Paul T

AskWoody MVPFebruary 10, 2022 at 3:01 am #2424368I can’t see how you reach that conclusion from the posts above.

The most likely reason for a smaller SSD and decent HDD is purely cost. Do you have experience of the replacement SSDs dying?

Having the HDD as backup seems to be a very sensible way to mitigate SSD failure. Even keeping the original SSD and making an image of it to the HDD whilst using the HDD for data is good mitigation. On the other hand, a couple of new 2TB SSDs at around $200 starts to look like a decent 2 disk NAS with UPS. 4 of them is a 4 disk unit with snapshot backup for true ransomware protection.

cheers, Paul

-

Ben Myers

AskWoody PlusFebruary 12, 2022 at 9:33 am #2424827This HP configuration largely matches the setup in my customized Dell Precision T5810 with its 256GB SSD for programs and regularly used stuff and a 2TB traditional hard drive to keep all the data I use. One thing to consider is customizing the hard drive setup to cycle the drive down when it has not been used for a while. The drive remains in a hot standby state, ready to cycle up again when needed, albeit with a few seconds delay. The idea is to save wear and tear on the drive motor and bearings. You can set this up in the Windows Power Options.
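The same spin-down timeout can also be set from the command line with `powercfg /change`, which may be handy when configuring several machines. A minimal sketch that only builds the command follows; the helper name and the 20-minute figure in the usage note are illustrative, not a recommendation from the post.

```python
def disk_timeout_command(minutes: int, on_battery: bool = False) -> list:
    """Build a powercfg command that sets the 'turn off hard disk after' timeout.

    A value of 0 means the disk is never spun down.
    """
    if minutes < 0:
        raise ValueError("minutes must be zero or greater")
    # disk-timeout-ac applies when plugged in, disk-timeout-dc when on battery.
    setting = "disk-timeout-dc" if on_battery else "disk-timeout-ac"
    return ["powercfg", "/change", setting, str(minutes)]
```

For a desktop workstation, `disk_timeout_command(20)` yields `powercfg /change disk-timeout-ac 20`, which can be pasted into an elevated Command Prompt or passed to `subprocess.run`.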

1 user thanked author for this post.

-

SupremeLaW

AskWoody PlusFebruary 15, 2022 at 3:31 pm #2425583An external USB-cabled enclosure is also useful if it has a separate ON/OFF switch.

With that enclosure switched ON, we’ve come to make heavy use of the XCOPY command in Command Prompt.

XCOPY works equally well if one is copying an entire top-level folder and/or some sub-folder below a top-level folder.

In this next simple example, XCOPY updates a backup copy of a top-level folder named “website.mirror”:

E:

cd \

xcopy website.mirror X:\website.mirror /s/e/v/d

Where,

X: is the Windows drive letter for a partition in the eXternal enclosure.

Now, as happens with our library website, we may only need to update the “authors” sub-folder in that library. XCOPY works the same way on sub-folders too:

E:

cd \

cd website.mirror

xcopy authors X:\website.mirror\authors /s/e/v/d

Finally, because the latter requires more typing than is absolutely necessary, we also wrote 2 custom BATCH programs named GET.bat and PUT.bat that launch XCOPY with the required command-line options e.g.:

cd \

cd website.mirror

put authors X

The GET.bat and PUT.bat BATCH programs take care of “parsing” the command-line options required by the XCOPY command.
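The idea behind PUT and GET can be sketched in a few lines of Python. The actual GET.bat and PUT.bat are not shown in this thread, so the following is an assumption about their behavior: PUT copies a sub-folder of the current directory to the same path on the target drive, GET copies it back, and both reuse the /s/e/v/d options from the examples above. The function names are hypothetical.

```python
from pathlib import PureWindowsPath

# Same options as the xcopy examples: sub-folders (including empty ones),
# verify, and copy only files newer than the existing copy.
XCOPY_FLAGS = ["/s", "/e", "/v", "/d"]


def _same_path_on(drive_letter: str, path: PureWindowsPath) -> PureWindowsPath:
    """Return the same folder path rooted on another drive letter."""
    return PureWindowsPath(f"{drive_letter}:\\", *path.parts[1:])


def put_command(cwd: str, folder: str, drive_letter: str) -> list:
    """Build the xcopy command that pushes cwd\\folder to the target drive."""
    source = PureWindowsPath(cwd) / folder
    dest = _same_path_on(drive_letter, source)
    return ["xcopy", str(source), str(dest), *XCOPY_FLAGS]


def get_command(cwd: str, folder: str, drive_letter: str) -> list:
    """Build the xcopy command that pulls the folder back from the target drive."""
    dest = PureWindowsPath(cwd) / folder
    source = _same_path_on(drive_letter, dest)
    return ["xcopy", str(source), str(dest), *XCOPY_FLAGS]
```

With the current directory at `E:\website.mirror`, `put_command(r"E:\website.mirror", "authors", "X")` produces the same xcopy invocation as the longer example above.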

-

Mele20

AskWoody LoungerFebruary 10, 2022 at 8:26 am #2424408It seems all reports of low remaining life are from Clear Disk.

I would consider Clear Disk to be suspect until proven otherwise.

Clear Disk is weird, but for the opposite reason to the one you mention. It claims Remaining life is 100% on a four-year, two-month-old Toshiba NVMe 500 GB. That reading makes no sense. Crystal DiskInfo says the health of the drive is “good” at 84%. That seems more credible.

bbearren

AskWoody MVPFebruary 10, 2022 at 12:37 pm #2424445Always create a fresh drive image before making system changes/Windows updates; you may need to start over! We all have our own reasons for doing the things that we do with our systems; we don't need anyone's approval, and we don't all have to do the same things. We were all once "Average Users".-

Ben Myers

AskWoody PlusFebruary 10, 2022 at 1:48 pm #2424462Yesterday’s New York Times had a piece by industry veteran John Markoff front, top and center of the business page addressing the issue that as circuits inside chips get closer and closer together, and smaller of course, the odds increase for random electrical leaks leading to equally random totally unpredictable failures of all kinds. This applies not just to CPUs, but also to memory and all kinds of other chips. Maybe the industry stops at 7nm processes? 5nm?

2 users thanked author for this post.

-

OscarCP

MemberFebruary 10, 2022 at 3:54 pm #2424489Ben Myers: “Maybe the industry stops at 7nm processes? 5nm?”