
In this issue

PUBLIC DEFENDER: You’re fired if you don’t know how to use GPT-4

Additional articles in the PLUS issue

MICROSOFT NEWS: Microsoft 365 Copilot announced

ONENOTE: What’s wrong with OneNote, and what you can fix

FREEWARE SPOTLIGHT: Temp_Cleaner GUI: Just what I was looking for

ON SECURITY: Who controls our tech?

PUBLIC DEFENDER You’re fired if you don’t know how to use GPT-4

By Brian Livingston

Mainstream media outlets are ablaze with news about GPT-4, OpenAI’s enormously powerful artificial-intelligence engine that will soon be shoehorned into every nook and cranny of Microsoft 365.

Suddenly, knowing how to “prompt” (program) a generative AI app has become an essential requirement for your job or your life. God help us.

We’ve all been instantly transported into the 25th-century world of Star Trek’s Jean-Luc Picard. You may think we’re still in the year 2023. But now, by entering just a few words, you can propel your personal starship through the galaxy at Warp 9. Or you can remain stuck in place and be assimilated by the Borg. Resistance is futile.

There’s more than one way to spin an app

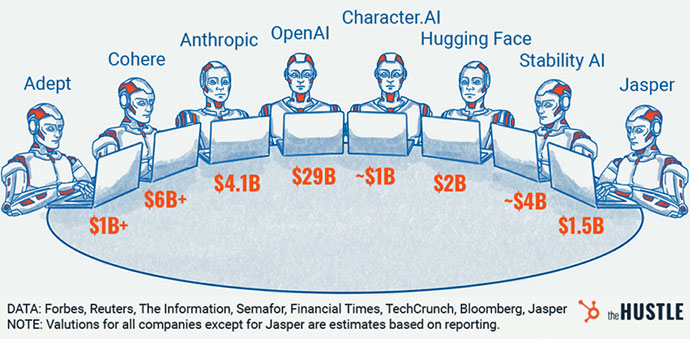

OpenAI has lately been the star of most media stories about artificial intelligence. This is largely because Microsoft poured over $10 billion into the company and then launched an embarrassing experiment: bolting a buggy GPT-3.x onto the Bing search engine. (See my February 27 column.) But OpenAI is not the only black hole your speedy starship needs to watch out for. At least eight artificial-intelligence startups are already unicorns, boasting a valuation of more than $1 billion each, according to an analysis by The Hustle. (See Figure 1.)

An initial public offering of OpenAI could give it market dominance with a valuation of $29 billion. Due to Microsoft’s stake, the Redmond company would own 49% of the newly public corporation.

However, OpenAI’s ChatGPT app, which achieved 100 million monthly active users within two months (a faster uptake than TikTok, the world’s most popular download in 2022), may not end up being the winner of the race. Contenders such as Anthropic, Cohere, and Stability AI are already well past mere unicorn status, each having valuations in the multiple billions of dollars.

All this may remind you of the 1999 Internet bubble. Back then, any company that appended “.com” to its name instantly soared in value. If that makes today’s gold rush seem familiar to you, you’re not alone.

Just among the hundreds of companies associated with the business incubator called Y Combinator, at least 50 are developing AI. Separately, four former Google engineers recently created Mobius AI. The newborn company received funding from Andreessen Horowitz and Index Ventures valuing the group at $100 million just one week after its formation, according to a New York Times article.

To the victor go the spoils, but will there even be a victor?

Whether OpenAI eventually crushes its competition will depend on the company’s new shiny object: GPT-4, which was released on March 14. Version 4 of the company’s “Generative Pre-trained Transformer” is turning out to be a notable advance. It compares well against the borderline-psychotic entity known as GPT-3.5, which Microsoft famously bet Bing’s reputation on.

As I wrote in my previous column, we all laughed uproariously, or shook our heads in horror, at Bing AI’s failings. One day, it proclaimed it was deeply in love with a Times columnist. On another day, it threatened to somehow go to court to sue tech reporters who had revealed tricks that succeeded in “jailbreaking” the chatbot, thus lifting its built-in conversational guardrails.

Outbursts such as these are well known to data scientists and are called hallucinations. Artificial-intelligence programs have a bad habit of becoming obsessed with them.

OpenAI was apparently scrutinizing and learning from Bing AI’s shocking rants. In a release-day technical report, the company asserts that “GPT-4 significantly reduces hallucinations relative to previous GPT-3.5 models.” (arXiv abstract, PDF)

More important to those of us who want to use, or will be forced to use, the bot’s verbal ability is GPT-4’s vastly improved understanding of facts. According to OpenAI’s report, GPT-4 did better than 90% of human test-takers on the US Uniform Bar Exam. It outscored 93% of them on the college-admission test known as SAT Evidence-Based Reading & Writing (EBRW). On more than a dozen similar exams, GPT-4, with or without its new vision-aware component, performed far better than GPT-3.5. (See Figure 2.)

At first glance, GPT-4’s improvement on exams looks impressive. On the other hand, this may not represent better performance in a real-world setting, such as a search-engine assistant. Scoring in the 90th percentile on the Uniform Bar Exam could simply mean that GPT-4 can search its “large language model” for the answers thousands of times faster than a human can retrieve the information from a test-taker’s stressed-out brain.

Do you fear that GPT-4 will cost knowledge workers their jobs? If so, you can shed crocodile tears over the bot’s poor performances on the Advanced Placement English Literature and English Language exams. GPT-3.5 and GPT-4 score better than only 10% to 15% of humans on those tests.

What do you see in these three pictures?

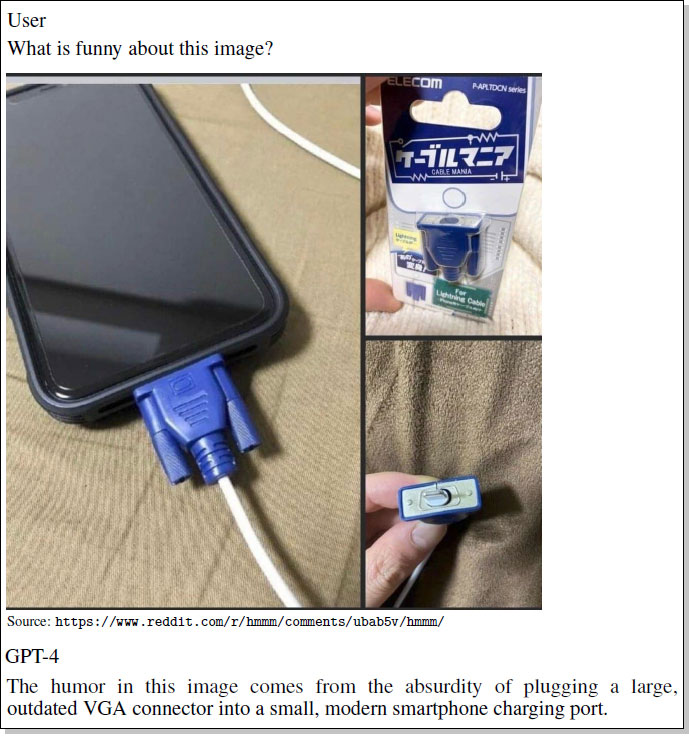

One of GPT-4’s most surprising improvements over its predecessor is a new visual-input capability. Put simply, you can submit to GPT-4 a photo or a mix of photos and text. The chatbot will often be able to make sophisticated descriptions of the content and what’s in the images.

In Figure 3, the trick is to relate the Lightning charging connector in the lower-right photo to the incongruous VGA cable in the left photo. Younger readers may never have seen a VGA cable. (Cables for DVI ports first came out around 1999, HDMI in 2004, and DisplayPort in 2008.) But GPT-4 wasn’t stumped by the odd technology in the pictures.

The possibilities for career advancement are unlimited

Let’s set aside for a moment the scientific rankings of AI skills. What most people want to know is how much they must now learn about chatbots to get their work done — or even to just get by in the world. Consider the following exciting job descriptions of the future:

Bad actors will always be with us. Unfortunately, chatbots are all too willing to output malware when asked by professional cybercriminals to do so.

How could a chatbot determine which projects are illegitimate? The same way a human does. Bots should evaluate the purpose of any code, determine whether it includes a Trojan horse, and so forth. What, bots aren’t that advanced? Maybe they’re not really safe for wide distribution yet.

To develop legitimate skills, try a legitimate front end to different bots

Perhaps a life of crime is not your personal goal. If so, you should try many distinct chatbots. An alternative, lesser-known bot may contribute more to your work and your life than one that happens to be more heavily promoted.

The Platform for Open Exploration (Poe) is an app that gives you access to multiple bots: GPT-4 by OpenAI, Claude and Claude+ by Anthropic, and more. It’s a service from Quora, a social website where users exchange questions and answers with each other.

Poe’s paid level is $19.99 per month. That’s a bargain, since it lets you use multiple bots, whereas OpenAI’s premium tier at $20/month provides only ChatGPT Plus.

It’s early in Poe’s development, so the app is currently available only on iOS devices such as iPhones and iPads, as well as M1/M2 Macs. Quora’s CEO, Adam D’Angelo, says a Windows app is coming soon, as well as access to more premium chatbots.

For more information, read D’Angelo’s announcement or visit the Poe website directly. (The site requires a valid phone number for registration.)

Who will set and enforce serious ethical standards for intelligent agents?

I wrote in my February 27 column that generative AI would be the greatest technology breakthrough since Gutenberg’s movable type, which made inexpensive books widely available. Economist Raoul Pal, CEO of Global Macro Investor, goes even further. He calls AI “the biggest technology shift humanity has ever faced,” perhaps “exceeding that of the nuclear bomb itself.” (Benzinga)

After their use in World War II, nuclear weapons spawned a host of safeguards, including the Nuclear Nonproliferation Treaty, secret keys and codes, and other protocols. To use a different example, prescription drugs are far less powerful than nuclear arms. But in most countries, new drugs must go through double-blind testing and be proven safe and effective before they are released to the public.

It may be that intelligent bots will truly have more impact than the invention of nuclear weapons. If that’s the case, who will ensure that tests for high ethical standards are required before these unpredictable and sometimes erratic agents are let loose on the world?

It looks like it won’t be Microsoft. The company fired the remaining seven employees in its Ethics and Society team this month. In a tape-recorded meeting, John Montgomery, corporate vice president of AI, said pressure from the company’s top executives “is very, very high” to take the latest OpenAI models “and move them into customers’ hands at a very high speed,” according to a Platformer report.

The guardian of ethics also might not be OpenAI. As you may have heard, the organization was started in 2015 as a nonprofit group. A pantheon of venture capitalists (including Sam Altman, Greg Brockman, Reid Hoffman, Jessica Livingston (no relation), Peter Thiel, Elon Musk, Olivier Grabias, Amazon Web Services, Infosys, and YC Research) pledged more than $1 billion. The firm committed itself to collaborating with other AI researchers and to making its patents public. Musk alone personally donated $100 million to what was then a high-minded charity. (Wikipedia)

In 2019, however, the nonprofit, OpenAI Inc., created a for-profit corporation, OpenAI LP. OpenAI Inc. is the only controlling shareholder of the profit-making entity.

Musk has said he and others wanted an open-source ethical AI research center to balance Google, which has its own private AI effort. But on March 15, Musk tweeted that OpenAI “somehow became a $30B market cap for-profit. If this is legal, why doesn’t everyone do it?”

In reality, it’s common for a nonprofit to own a for-profit subsidiary. A charity may actually be required to set up a for-profit to keep unrelated business income separate and thereby avoid losing tax-exempt status. (LegalZoom) In this case, though, the spin-off smells to high heaven.

The MIT Technology Review published in 2020 the definitive story on OpenAI’s switcheroo. “The AI moonshot was founded in the spirit of transparency,” author Karen Hao wrote at the time. “Competitive pressure eroded that idealism.” Alberto Romero, an analyst at CambrianAI and editor of The Algorithmic Bridge, added an insightful follow-up to MIT’s reporting one year later.

OpenAI’s March 14 technical report seems to continue the discouraging trend. The white paper “makes it feel like it’s open-source and academic, but it’s not,” says William Falcon, CEO of Lightning AI and creator of an open-source Python library (which does not compete with OpenAI). “They describe literally nothing in there,” which is the opposite of the company’s previous papers, he said in a VentureBeat interview.

An AI researcher with École des Ponts ParisTech, David Picard, said in a tweet, “Please @OpenAI, change your name ASAP. It’s an insult to our intelligence to call yourself ‘open’ and release that kind of ‘technical report’ that contains no technical information whatsoever.”

Ben Schmidt, vice president of information design at Nomic AI, tweeted, “I think we can call it shut on ‘Open’ AI: the 98-page paper introducing GPT-4 proudly declares that they’re disclosing *nothing* about the contents of their training set.”

A better name for OpenAI might be Not-OpenAI. Since we all know how expensive signage can be, the for-profit entity could save money by shortening the new name to NopeAI before affixing it atop its corporate headquarters. You simply move the last letter of “open” to the beginning. No need to waste venture capital on any new neon-lighted fixtures.

I used a Web-design site, Zarla, to create a new logo and tagline for the company. At left, the old logo is on top. (Wikimedia Commons) The new design appears in the middle. You can see that the brand looks great on T-shirts, too, in Ben Franklin green. (Zarla 3-D simulation; no actual T-shirts were harmed.)

We’ll be hearing a lot more about generative artificial intelligence in the months and years to come. I hope humanity is ready to deal with it.

The PUBLIC DEFENDER column is Brian Livingston’s campaign to give you consumer protection from tech. If it’s irritating you, and it has an “on” switch, he’ll take the case! Brian is a successful dot-com entrepreneur, author or co-author of 11 Windows Secrets books, and author of the new fintech book Muscular Portfolios. Get his free monthly newsletter.

The AskWoody Newsletters are published by AskWoody Tech LLC, Fresno, CA USA.


Microsoft and Windows are registered trademarks of Microsoft Corporation. AskWoody, AskWoody.com, Windows Secrets Newsletter, WindowsSecrets.com, WinFind, Windows Gizmos, Security Baseline, Perimeter Scan, Wacky Web Week, the Windows Secrets Logo Design (W, S or road, and Star), and the slogan Everything Microsoft Forgot to Mention all are trademarks and service marks of AskWoody Tech LLC. All other marks are the trademarks or service marks of their respective owners. Copyright ©2023 AskWoody Tech LLC. All rights reserved.
